If we can get over Microsoft’s cheesy catchphrase for a moment, the whole idea of “to the cloud” is actually pretty cool. It’s the promise of taking things that used to be both labour and capital intensive, commoditising them and serving them up on demand. This can very easily sound like PowerPoint presentation rhetoric so let’s move past the warm and fuzzies and actually see it in action.

A couple of weeks back I published a screencast as part of my 5 minute wonders series titled From zero to hero with AppHarbor. In that session I took my existing membership-enabled ASP.NET website (itself the subject of a previous 5 minute wonder), and literally sent it to the cloud courtesy of AppHarbor. So here we are 10 minutes later with a fully functional registration and log in enabled website under source control with continuous build and deployment. Oh, and it’s all been free.

But one of the great things about the promise of cloud-based services is that they can extend well beyond just app hosting. Microsoft’s Office 365 is just one example of software as a service using the cloud as a delivery channel and there are many more appearing every day. Today though, I want to look at something a little closer to web developers’ hearts: Blitz.

What is Blitz and why does ASafaWeb need it?

Blitz is simply a load testing service and AppHarbor has been kind enough to work with them and introduce it as an add-on in their catalogue. Blitz does exist in its own right independently of AppHarbor; it’s just that AppHarbor has made it really easy to consume the service and run it against existing apps.

The reason this is important for ASafaWeb is that it’s the kind of site that, well, isn’t going to be real scalable. I say that simply because the entire rationale of ASafaWeb is to sit there requesting resources from other websites which means holding open HTTP connections and waiting for responses from sites which could be anywhere. As of today, a single scan usually includes four or five HTTP requests and takes a couple of seconds to run. However, it can take a whole lot longer than that depending on how quickly it can get a response.

What I decided to do with ASafaWeb is put absolutely zero upfront effort into performance optimisation. I don’t mean I intentionally built it to be slow, I just mean that I built each feature with the lowest possible effort for it to be functional. Part of the reason was that I wanted to put Blitz through its paces and actually quantify the performance gains from some tuning measures I have in mind. So let’s get on with the show.

Let the Blitzing begin!

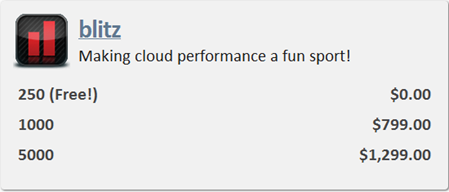

Blitz just sits on the AppHarbor add-ons page and like pretty much everything about AppHarbor, you can get into it for free:

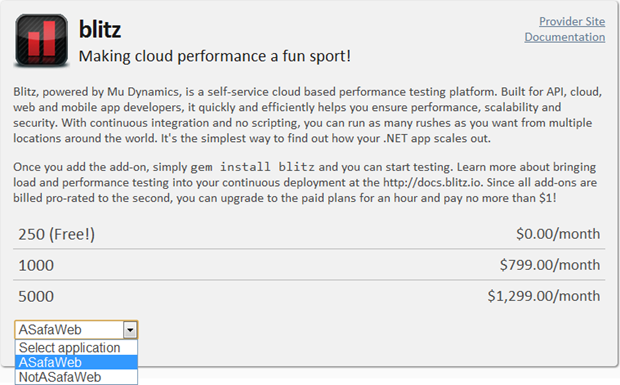

The free test gives us a load simulation of 250 concurrent users which is heaps for ASafaWeb. The first thing you need to do when selecting the service is to choose which app to run it against. I have a couple in AppHarbor but today it’s going to be run against ASafaWeb:

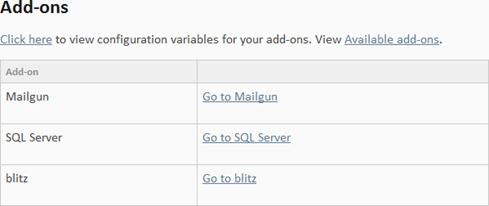

After adding Blitz it appears as just another add-on for the app:

Once we actually go off to Blitz we end up on their website but wrapped in AppHarbor nav with the ASafaWeb app listed just below that. Oh, and we’re prompted to “Play!”; sounds fun:

Once we start playing we get into the semantics of how Blitz runs. Firstly there’s the concept of a “sprint”. Think of a sprint as a single runner putting your site through its paces. Then there’s a “rush” which is basically a whole bunch of sprints thrown against the site in a coordinated fashion. This is where the load gets generated.

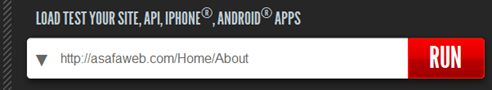

Kicking off a sprint is as simple as plugging in a URL. I want to get a feel for how the app performs when it’s not doing anything labour intensive so let’s kick off with the “About” page. ASafaWeb is an ASP.NET MVC 3 app and this page is nothing more than a controller directly returning a static view with no other processing going on so it should be super fast.

Running the sprint results in a little bit of processing followed by a rundown of the response received from the site:

What’s important though is the command line right up the top. Clicking on this guy fires off the rush which is where all the excitement begins. What we get now – and it doesn’t translate well to static images on a blog – is a graph that updates in five second intervals. Once it all runs, it looks something like this:

Imagine that each one of the points in the first graph appears in real time in five second intervals. It’s plotted against the time in the rush on the X axis and the time it takes the page to respond on the Y axis. The diagonal grey line is the number of concurrent users and as you can see, it scales up to two hundred and fifty over the duration of the sixty second test.

The second graph simply reports the hits per second which naturally aligns pretty well with the number of users. In other words, ASafaWeb’s “About” page is able to successfully return results at the same rate requests are coming in right up to the maximum 250 per second. What all this is telling us is that as users scale up, ASafaWeb has absolutely no trouble in meeting the demand for the page. No surprises there.

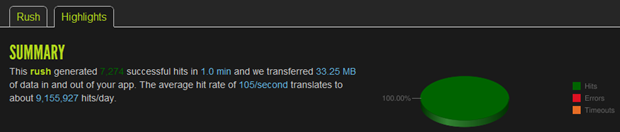

Over on the “Highlights” tab, we get a little more info but again, it’s all good news:

Pretty impressive stats for a free service – 7,272 requests in only one minute! Now let’s make it interesting.

Loading up ASafaWeb scans

ASafaWeb enables GET requests to execute scans by constructing the URL with the site to be scanned. It means a request to scan a site such as isnot.asafaweb.com (the dummy vulnerable site I use for testing), looks like this: http://asafaweb.com/Scan?Url=isnot.asafaweb.com
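To make the URL construction concrete, here's a minimal sketch in Python. The endpoint and the `Url` parameter come straight from the example above; the `build_scan_url` helper is purely hypothetical, and I'm assuming the target should be URL-encoded when it contains anything beyond a plain host name.

```python
from urllib.parse import urlencode

# Endpoint as shown in the example URL above.
SCAN_ENDPOINT = "http://asafaweb.com/Scan"


def build_scan_url(target: str) -> str:
    """Return the GET URL that asks ASafaWeb to scan `target`.

    Hypothetical helper: the target is passed as the `Url` query string
    parameter and URL-encoded, which is an assumption for anything
    other than a bare host name.
    """
    return f"{SCAN_ENDPOINT}?{urlencode({'Url': target})}"


print(build_scan_url("isnot.asafaweb.com"))
# http://asafaweb.com/Scan?Url=isnot.asafaweb.com
```

The point is simply that a scan is an ordinary GET request, which is exactly what makes it easy to hand to a load testing tool.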

The thing is though, each scan ASafaWeb runs actually results in numerous HTTP requests to the target website. Consequently, the scan above means 4 requests which consume a total of about 11KB and take around a second to execute. This is pretty quick because it’s scanning another site on AppHarbor and there’s basically no content on it (that’s 11KB across four separate responses), but scan a normal website in, say, Australia and those numbers quickly explode. Think more like an order of magnitude higher.

By default, Blitz will time out one second after issuing a request which simply won’t work for ASafaWeb through no fault of its own. Fortunately we can customise the runner with a timeout switch (the value is in milliseconds, so 10000 means ten seconds) so combining that with the default values gives us something more like this:

-p 1-250:60 -T 10000 http://asafaweb.com/Scan?Url=…

Just one more thing though; we don’t have to scale up to 250 concurrent users and indeed this puts one hell of a load on the scanning engine in ASafaWeb. Through some trial and error combined with a “reasonable expectation” of what I thought the site should initially support, I settled on 50 concurrent users. This might not sound like a lot, but keep in mind it means 50 scans all executing at the same time. To demonstrate where things start to fail, I tweaked the scan a little to run up to 100 users as follows:

-p 1-100:60 -T 10000 http://asafaweb.com/Scan?Url=…

So it’s just that first switch. Let’s run it up:

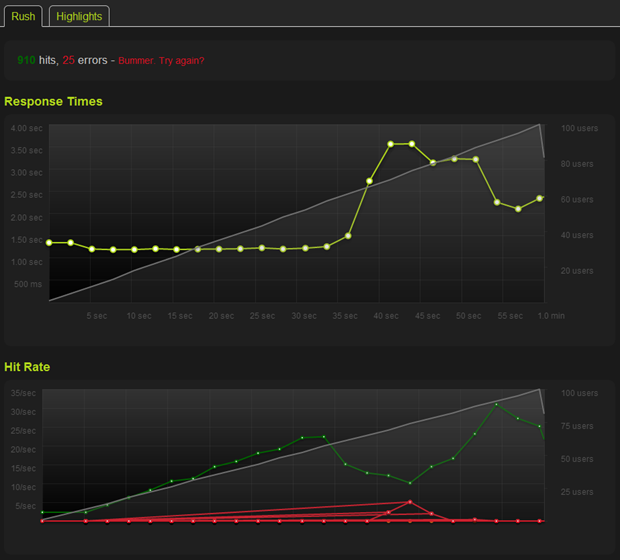

Firstly, these results are highly variable simply because there are so many different things happening. You’ve got Blitz talking to ASafaWeb running on shared infrastructure which is then making a bunch of requests off to the other side of the world against a server which then has its own overheads and variances in the way it responds. The result above is simply one of the more consistent, cleaner looking ones which make interpretation a little easier.

The initial graph shows everything looking pretty stable up until about the 35 second mark. This scan should take about 1 to 1.5 seconds to execute and we can see that happening cleanly by virtue of each of the points on the yellow line being quite consistent for the first half of the test. Looking at the diagonal grey line which illustrates the number of concurrent users, it’s at about 60 users that things start to change.
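As a quick sanity check on reading that grey line, here's a small sketch that maps a point in time to a user count. It assumes the ramp from 1 to 100 users over 60 seconds is linear, which is how the graph appears; the `users_at` function is hypothetical and isn't anything Blitz itself exposes.

```python
def users_at(second: float, start: int = 1, end: int = 100,
             duration: float = 60.0) -> float:
    """Concurrent users at `second`, assuming a linear ramp.

    Defaults mirror the 1-100 users over 60 seconds pattern used in
    this test. The linearity is an assumption based on the graph.
    """
    if not 0 <= second <= duration:
        raise ValueError("second must fall within the rush duration")
    return start + (end - start) * second / duration


print(round(users_at(35)))  # 59
```

So the 35 second mark lines up with roughly 60 users, which matches what the graph shows.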

The first thing we notice at the 60 user mark is that the response times increase. More load simply equals a longer duration for the app to do its work and return a response. The graph below then tells us that this is also affecting our throughput in terms of the number of hits the app is able to serve per second. The green line shows it happily serving up to about 23 in a second after which it actually decreases. Why? The app has clearly exhausted its resources and as we know from the top graph, it’s having trouble responding in the usual amount of time; longer response times equal fewer hits per second. In fact we’ve effectively just performed a little denial of service attack on ASafaWeb.
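The relationship between response time and throughput can be roughed out with Little's law: if every simulated user fires off its next request as soon as the previous one returns, hits per second is approximately the number of concurrent users divided by the response time. That "back to back" behaviour is my assumption rather than a documented detail of how Blitz works, and the numbers below are purely illustrative, not measurements from this test.

```python
def hits_per_second(concurrent_users: float, response_seconds: float) -> float:
    """Approximate throughput via Little's law.

    Assumes each user issues requests back to back with no think time,
    which is an assumption, not a documented Blitz behaviour.
    """
    if response_seconds <= 0:
        raise ValueError("response_seconds must be positive")
    return concurrent_users / response_seconds


# Illustrative values only: more users can still mean fewer hits per
# second once response times blow out.
print(hits_per_second(60, 2.5))  # 24.0
print(hits_per_second(80, 5.0))  # 16.0
```

In other words, once response times grow faster than the user count does, throughput has nowhere to go but down.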

The other thing we see in the bottom graph is the red error line. We really don’t want this guy because it means the server is effectively saying “That’s it – time out!” Not “time out” as in it hasn’t responded within ten seconds, “time out” as in it’s responded and kindly advised it can’t serve the request. “Resource exhaustion” as Blitz refers to it.

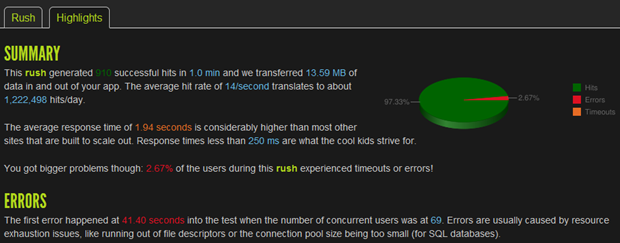

Finally, we get a rundown on the whole scenario via the “Highlights” tab. As we can see here, we got up to 69 concurrent users before that first error occurred at nearly the 42 second mark:

Not too bad, given what it’s doing, but I think we can do better…

Scaling up, courtesy of AppHarbor

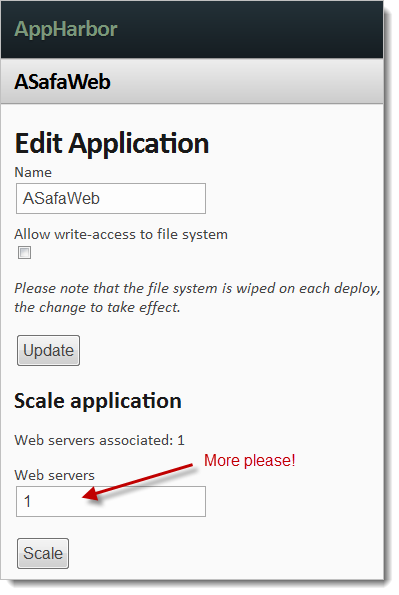

The promise of the cloud is that everything is commoditised; pay for what you use, add more when you need it and do it all on-demand. Such is the AppHarbor promise and it couldn’t be easier:

For now, AppHarbor are offering scaling up to an additional server free of charge which is rather convenient. What this means is that ASafaWeb can scale up to a much loftier height at the click of a button. Let’s run that Blitz scan again now that we’ve doubled the servers:

Given all the variances which enter into one of these scans, this is a very linear result. Ok, there’s one error but we’re talking 0.08% failure rate now rather than 2.67% with only the single server so that’s a pretty fundamental difference. Put another way, we’ve just scaled out the app such that the error rate due to load has reduced by 97%, and all we did was to click a button.
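For anyone wanting to check the arithmetic behind that 97%, it's just the relative drop between the two failure rates. A tiny sketch, with `relative_reduction` being a hypothetical helper name:

```python
def relative_reduction(before_pct: float, after_pct: float) -> float:
    """Percentage by which a rate fell, relative to where it started."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    return (before_pct - after_pct) / before_pct * 100


# Failure rates quoted above: 2.67% on one server, 0.08% on two.
print(f"{relative_reduction(2.67, 0.08):.0f}%")  # 97%
```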

Summary

This is a good place to reflect on that cloud value proposition; the ASafaWeb code could be improved a lot, no doubt about it. I could spend some time analysing it or even pay someone smarter than me to do it and I’m sure, with sufficient effort, it would perform better than it does today. Alternatively, I can just add scale to the infrastructure. Of course this would normally come at a cost, but for a business, the temptation just to whip out the credit card and instantly add performance is very significant indeed.

The Blitz cloud offering is also very attractive. It wasn’t that long ago that load testing meant running some tricky software and trying to simulate load by standing up multiple “bots” to distribute requests across an orchestrated collection of machines. It wasn’t hard, but it took some effort to configure and then analyse. I don’t know what sort of back-end Blitz runs and the great thing about the cloud paradigm is that I don’t care. They offer a service. It works. End of story.

And that’s really exactly what we expect from this “cloud” concept which is thrown around so haphazardly today; we want something we can turn on when we want it, scale it up whenever we feel like it and not give a damn about the mechanics which make it all work. This is the promise of cloud delivered right into our hands by AppHarbor. For free.