No matter how much anyone tries to sugar coat it, a service like Have I been pwned (HIBP) which deals with billions of records hacked out of other people's systems is always going to sit in a grey area. There are degrees, of course; at one end of the spectrum you have the likes of Microsoft and Amazon using data breaches to better protect their customers' accounts. At the other end, there are services like the now-defunct LeakedSource, which happily sold our personal data (including mine) to anyone willing to pay a few bucks for it.

As far as intent goes, HIBP sits at the white end of the scale, as far to that extreme as I can possibly position it. It's one of many things I do in the security space alongside online training, conference talks, in-person workshops and of course writing this blog. All of these activities focus on how we can make security on the web better; increasing awareness, reducing the likelihood of a successful attack and minimising the damage if it happens. HIBP was always intended to amplify those efforts and indeed it has helped me do that enormously.

What I want to talk about here today is why I've made many of the decisions I have regarding the implementation of HIBP. This post hasn't been prompted by any single event; rather, it seeks to address questions I regularly see coming up. I want to explain my thinking and invite people to contribute comments in the hope of making it a more useful system for everyone.

The Accessibility of a Publicly Searchable System

The foremost question that comes up as it relates to privacy is "why make the system publicly searchable?" There are both human and technical reasons for this and I want to start with the former.

Returning an immediate answer to someone who literally asks the question "have I been pwned?" is enormously powerful. The immediacy of the response addresses a question that's clearly important to them at that very moment and from a user experience perspective, you simply cannot beat it.

The value in the UX of this model has significantly contributed to the growth of the service and as such, the awareness it's raised. A great example is when you see someone take another person through the experience: "here, you just enter your email address and... whoa!" The penny suddenly drops that data breaches are a serious thing and thus begins the discussion about password strength and reuse, multi-step verification and other fundamental account management hygiene issues.

The fact that someone can search for someone who is not them is a double-edged sword; the privacy risk is obvious in that you may discover someone was in a particular data breach and then use that information to somehow disadvantage them. However, people also extensively use the service to help protect other people, for example by identifying exposed spouses, friends, relatives or even customers and advising them accordingly.

I heard a perfect example of this just the other day when speaking to a security bod in a bank. He explained how HIBP was used when communicating with customers who'd suffered an account takeover. By highlighting that they'd appeared in a breach such as LinkedIn, they are able to help the customer understand the potential source of the compromise. Without being able to publicly locate that customer in HIBP, it would be a much less feasible proposition for the bank.

I mitigate the risk of public discoverability adversely impacting someone by flagging certain breaches as "sensitive" and excluding them from publicly visible results. This concept came in when the Ashley Madison data hit and the only way to see if you're in that data breach (or any other that poses a higher risk of disadvantaging someone) is to receive an email to the searched address and click on a unique link (I'll come back to why I don't do that for all searches in a moment).
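To make the sensitive-breach rule concrete, here's a minimal sketch in Python. It is not HIBP's actual code: the `Breach` type, the `is_sensitive` flag name and the `verified_by_email` parameter are all hypothetical names chosen for illustration. The only thing taken from the description above is the rule itself, namely that sensitive breaches stay hidden unless the search came through the emailed unique link.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Breach:
    """Hypothetical record for a breach an address appears in."""
    name: str
    is_sensitive: bool  # assumed flag, set by a manual judgement call


def visible_breaches(breaches, verified_by_email=False):
    """Return the breaches that may be shown for a search.

    A public search only sees non-sensitive breaches. A search that
    arrived via the unique link emailed to the searched address
    (verified_by_email=True) sees everything.
    """
    if verified_by_email:
        return list(breaches)
    return [b for b in breaches if not b.is_sensitive]


found = [Breach("LinkedIn", False), Breach("Ashley Madison", True)]
public_names = [b.name for b in visible_breaches(found)]
verified_names = [b.name for b in visible_breaches(found, verified_by_email=True)]
```

The design choice worth noting is that the default is the restrictive one: a caller has to explicitly assert that the address owner was verified before anything sensitive comes back.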

I've actually had many people suggest that it's ok to show the sensitive results I'm presently returning privately because the privacy of these individuals has already been compromised due to the original breach. I don't like this argument and the main reason is that I don't believe the act of someone else having illegally broken into another system means the victims of that breach should be exposed further in ways that would likely disadvantage them. It's not the only time I've heard this, for example after launching Pwned Passwords last month a number of people said "you should just return email address and password pairs because their data is out there anyway". Shortly after that, I was told I'm "holding people hostage" by not providing the passwords for compromised email addresses. In fact, I had someone get quite irate about that after loading the Onliner Spambot data with the bloke in question then proceeding to claim that not disclosing it wasn't protecting anyone. Someone else suggested I was "too old fashioned and diplomatic". No! These all present a significantly greater risk for those individuals and for someone who himself is in HIBP a dozen times now, I'd be pretty upset if I saw any of this happening.

Searching by Email Verification is Fraught with Problems

This is the alternative I most frequently hear – "just email the results". There are many reasons why this is problematic and I've already touched on the first above: the UX is terrible. There's no immediate response and instead you're stuck waiting for an email to arrive. Now you may argue that a short wait is worth the trade-off, but there's much more to it than that.

HIBP gets shared and used constructively in all sorts of environments that depend on an immediate response. For example, it gets a huge amount of press and a search is regularly shown in news pieces. Many people (particularly in the infosec community) use it at conference talks and they're not about to go opening up their personal email to show a result.

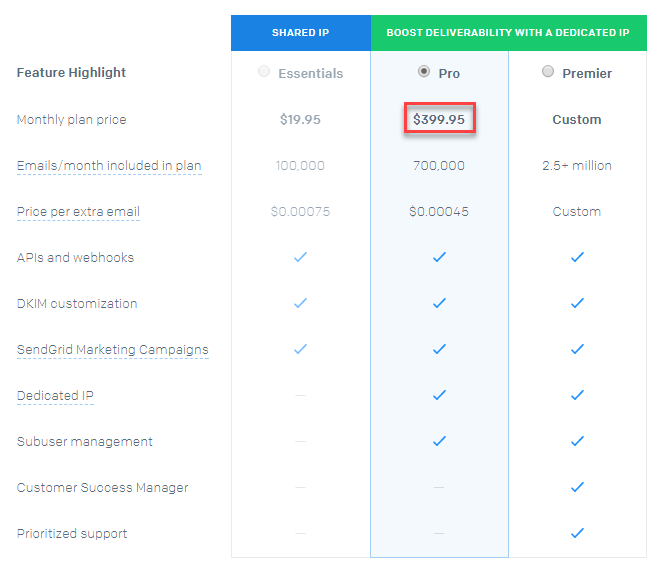

But those are arguments in favour of accessibility and I appreciate not everyone will agree with them so let's move onto hard technical challenges and the first is delivering email. It's very hard. In all honesty, the single most difficult (and sometimes the single most expensive) part of running this service is delivering mail and doing it reliably. Let's start there actually - here's the cost of sending 700k emails via SendGrid:

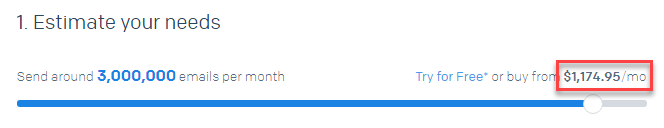

Now fortunately, SendGrid helps support the project so I don't end up wearing that cost but you can see the problem. Let's just put the challenge of sending an email on every search in context for a moment: a few weeks ago, I had 2.8 million unique visitors in just one day after making the aforementioned 711 million record Onliner Spambot dump searchable. Each one of those people did at least one search and if I was to pay for that volume, here's what I'd be looking at:

That's one day of traffic. I can't run a free service that way and I hate to think of the discussion I'd be having with my wife if I did! Now that was one exceptional day but even in low periods I'm still talking about many millions of visitors a month. As it is, I'm coming very close to maxing out my email allocation each month just from sending verification emails and notifications when I load breaches. And no, a cheaper service like Amazon SES is not a viable alternative, I've been down that path before and it was a debacle for many reasons plus it would still get very pricey. (Incidentally, large volumes of emails in a spike often cause delivery to be throttled which would further compound the UX problem of people waiting for a search result to land.)

And then there are the deliverability problems. One of the single hardest challenges I have is reliably getting mail through to people's inboxes. Here's what my mail setup looks like in terms of spam friendliness:

DKIM is good. SPF is good. I have a dedicated IP. I'm not on any black lists. Everything checks out fine yet consistently, I hear people say "your notification went to junk". I suspect it's due to the abnormal sending patterns of HIBP, namely that when I load a breach there's a sudden massive spike of emails sent but even then, it's only ever to HIBP subscribers who've successfully double-opted-in. So, think of what that would mean in terms of using email as the sole channel for sharing breach exposure: a heap of people are simply going to miss out. They won't know they were exposed in a breach, they won't adapt their behaviour and for them, HIBP becomes useless.

I've seen criticism from other services attempting to do similar things to HIBP based on the fact I'm not just sending emails to answer that "have I been pwned?" question. But they're at a very different stage of maturity and popularity and simply don't have these challenges – it'd be a lot easier if I was only sending hundreds of emails a day and not tens or sometimes even hundreds of thousands. They're also often well-funded and commercialise their visitors so you can see why they may not understand the unique challenges I face with HIBP.

In short, this is the best possible middle ground I can find. Not everyone agrees with it, but I hope that even the folks who don't can see it's a reasoned, well thought out conclusion.

Because I Don't Want Your Email Address

There are a number of different services out there which offer the ability to identify various places your data has been spread across the web. It's a similar deal to HIBP insofar as you enter an email address to begin the search, but many then promise to "get back to you" with results. Of course, during this time, they retain your address. How long do they retain it for? Well...

Someone directed me to Experian's "Dark Web Email Scan" service just recently. I had the feeling just from reading the front page that there was more going on than meets the eye so I took a look at the policies they link to and that (in theory) you must read and agree to before proceeding:

It's stunts like @Experian is pulling that erode trust in these companies: that's 21,494 words you need to agree to: https://t.co/ulsAKGv6D8 pic.twitter.com/k6GPAGZqPu

— Troy Hunt (@troyhunt) September 9, 2017

Folks who've actually read all this have subsequently pointed out that as expected, providing your address in this way now opts you into all sorts of things you really don't want. In fact, I saw it myself first hand:

Geez the Experian "dark web search" is terrible: several days to get a result, useless info in the report and 2 subsequent spam mails since pic.twitter.com/ccFEbZStxq

— Troy Hunt (@troyhunt) September 13, 2017

In other words, the service is a marketing funnel. The premise of "just leave us with your address and we'll get back to you" is often a thin disguise to build up a list of potential customers. Part of the beauty of HIBP returning results immediately is that the searched address never goes into a database. The only time this happens is when the user explicitly opts in to the notification service in which case I obviously need the address in order to contact them later should they appear in a new data breach. It's data minimisation to the fullest extent I can; I don't want anything I don't absolutely, positively need.

Incidentally, by Experian not explicitly identifying the site the breach occurred on it makes it extremely difficult for people to actually action the report. They're not the only ones - I've seen other services do this too - and it leaves the user thinking "what the hell do I do now?!" I know this because it's precisely the feedback I had after loading the Onliner Spambot data I mentioned earlier, the difference being that I simply didn't know with any degree of confidence where that data originated from. But when I do, I tell people - it's just the right thing to do.

The API Is an Important Part of the Ecosystem

One of the best things I did very early on in terms of making the service accessible to a broad range of people was to publish an API. In addition to that, I list a number of the consumers of the service and they've done some great things with it. There are many other very good use cases you won't see publicly listed and that I can't talk about here, but you can imagine the types of positive implementations ingenious people have come up with.

In many cases, the API has enabled people to do great things for awareness. For example, this implementation at a user group I spoke at in the Netherlands recently (and yes, participation was opt-in):

So @ordina set up facial recognition via photos uploaded at registration & checked people against @haveibeenpwned on arrival #TroyAtOrdina pic.twitter.com/3C1gFA4Q4t

— Troy Hunt (@troyhunt) July 5, 2017

The very nature of having an API that can search breaches in this fashion means the data has to be publicly searchable. Even if I put API keys on the thing, I'd then have the challenge of working out who I can issue them to then policing their use of the service. For all the reasons APIs make sense for other software projects, they make sense for HIBP.

Now, having said all that, the API has had to evolve over time. Last year I introduced a rate limit after seeing usage patterns that were not in keeping with ethical use of the service. As a result, one IP can now only make a request every 1.5 seconds and anything over that is blocked. Keep it up and the IP is presented with a JavaScript challenge at Cloudflare for 24 hours. Yes, you can still run a lot of searches but instead of 40k a minute as I was often seeing from a single IP, we're down to 40. In other words, the worst-case scenario is only one one-thousandth of what it previously was. What that's done is forced those seeking to abuse the system to seek the data out from other places as the effectiveness of using HIBP has plummeted.
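The arithmetic behind that limit is easy to check, and the rule itself can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how HIBP or Cloudflare actually implement it: the `PerIpRateLimiter` class and its in-memory dictionary are hypothetical, and the 24 hour JavaScript challenge for repeat offenders isn't modelled at all. The one figure taken from the post is the 1.5 second minimum gap between requests from a single IP.

```python
import time

MIN_INTERVAL_SECONDS = 1.5  # from the post: one request per IP every 1.5 seconds


class PerIpRateLimiter:
    """Hypothetical in-memory limiter: one allowed request per IP per interval."""

    def __init__(self, min_interval=MIN_INTERVAL_SECONDS, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock          # injectable so the limiter can be tested
        self.last_allowed = {}      # IP address -> time of last allowed request

    def allow(self, ip):
        """Return True if this request may proceed, False if it is blocked."""
        now = self.clock()
        last = self.last_allowed.get(ip)
        if last is not None and now - last < self.min_interval:
            return False            # too soon after the previous allowed request
        self.last_allowed[ip] = now
        return True


def max_requests_per_minute(min_interval=MIN_INTERVAL_SECONDS):
    """Upper bound on allowed requests per minute from a single IP."""
    return int(60 / min_interval)


per_minute = max_requests_per_minute()   # 60 / 1.5
reduction = per_minute / 40_000          # versus the 40k a minute seen before
```

With a 1.5 second gap, `per_minute` works out to 40, and 40 against the earlier 40k a minute is the one one-thousandth figure quoted above.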

Like many of the decisions I've made to protect individuals who end up in HIBP, this one has also garnered me criticism. Very often I feel like I'm damned if I do and I'm damned if I don't; some people were unhappy in this case because it made some of the things they used to do suddenly infeasible. Yes, it slashed malicious use but you can also see how it could impact legitimate use of the API too. I'm never going to be able to make everyone happy with these decisions, I just have to do my best and continue trying to strike the right balance.

I'm Still Adamant About Not Sharing Passwords Attached to Email Addresses

A perfect example of where I simply don't see eye to eye with some folks is sharing passwords attached to email addresses. I've maintained since day 1 that this poses many risks and indeed there are many logistical problems with actually doing this, not least of which is the increasing use of stronger hashing algorithms in the source data breaches.

Not everyone has the same tolerance to risk in this regard. I mentioned earlier how some especially shady services will provide your personal data to anyone else willing to pay; passwords, birth dates, sexualities - it's all up for grabs. Others will email either the full password or a masked portion of it, both of which significantly increase the risk to the owner of that password should that email be obtained by a nefarious party.

I've tried to tackle the gap between providing a full set of credentials and only the email address by launching the Pwned Passwords service last month. Whilst the primary motivation here was to provide organisations with a means of identifying at-risk passwords during signup, it also helps individuals directly impacted by data breaches; find both your email address and a password you've used before on HIBP and that's a pretty solid sign you want to revisit your account management hygiene.
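As a rough idea of what a signup-time check could look like, here's a minimal Python sketch. It assumes the breached passwords are available locally as a set of uppercase SHA-1 hex digests; that storage format, and the function names `sha1_hex` and `is_pwned`, are assumptions of this sketch rather than anything described in this post. The point it illustrates is that the check needs only the password itself, with no email address attached.

```python
import hashlib


def sha1_hex(password):
    """Uppercase SHA-1 hex digest of a password (assumed storage format)."""
    return hashlib.sha1(password.encode("utf-8")).hexdigest().upper()


def is_pwned(password, breached_hashes):
    """Return True if the password's hash is in the set of breached hashes."""
    return sha1_hex(password) in breached_hashes


# Hypothetical stand-in for a real list of breached password hashes.
breached_hashes = {sha1_hex("password"), sha1_hex("123456")}

reject_signup = is_pwned("password", breached_hashes)
accept_signup = not is_pwned("a long and unusual passphrase", breached_hashes)
```

In a real signup flow the set would be loaded from whatever source the organisation trusts, and a hit would prompt the user to choose a different password rather than reveal where it was seen.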

At the end of the day, no matter how well I was to implement a solution that attached email addresses to other classes of personal data, there's simply no arguing with the basic premise of I cannot lose what I do not have. I have to feel comfortable with the balance I strike in terms of how I handle this data and at present, that means not putting it online.

There Are Still a Lot of Personal Judgement Calls

I've been asked a few times now what the process for flagging a breach as sensitive is and the answer is simply this: I make a personal judgement call. I have to look at the nature of the service and question what the impact would be if HIBP was used as a vector to discover if someone has an account on that site. I don't always get this right; I didn't originally flag the Fur Affinity breach as sensitive because I didn't understand how furries can be perceived until someone explained it to me. (For the curious, it's the sexual aspects of furries that came as news to me.)

HIBP is a constant series of judgement calls when it comes to the ethics of running the service. The data I should and should not load is another example. I didn't load the Australian Red Cross Blood Service breach because we managed to clean up all known copies of it (there are multiple reasons why I'm confident in that statement) and they committed to promptly notifying all impacted parties which they summarily did. I removed the VTech data breach because it gave parents peace of mind that data relating to kids was removed from all known locations. In both those cases, it was a judgement call made entirely of my own free volition; there were no threats of any kind, it was just the right thing to do.

HIBP is not about trying to maximise the data in the system, it's about helping people and organisations deal with serious criminal acts. Frankly, the best possible outcome would be for there to be no more breaches to load. This is what all my courses, workshops, conference talks and indeed hundreds of blog posts are trying to drive us towards – fixing the problems that have led to data breach search services being a thing in the first place. Not everyone has those same motives though, and that's leading us to some pretty shady practices.

The "No Shady Practices" Rule

As I said in the intro, there's no sugar-coating the fact that handling data breaches is always going to sit in a grey area. This makes it enormously important that every possible measure is taken to avoid any behaviour whatsoever that could be construed as shady. It probably shouldn't surprise anyone, but this is not a broadly held belief amongst those dealing with this class of data.

I mentioned LeakedSource earlier on; there are still multiple sites following the same business model of "give us a few bucks and we'll give you other people's data". There's a total disregard not just for the privacy of people like you and me, but for the impact it can then have on our lives. People bought access to my own data – I know this because someone once sent it to me! Many of these services operate with impunity under the assumption that they're anonymous; great lengths are gone to in order to obfuscate and shield the identity of the operators although as we saw with LeakedSource, anonymity can be fleeting.

There are also multiple organisations paying for data breaches. What this leads to is criminal incentivisation; rewarding someone for breaking into a system and pilfering the data in no way improves the very problem these services set out to address. Mind you, the argument could be made that the purpose these services primarily serve is to be profitable and viewed in that light, paying for data and then charging for access to it probably makes sense from an ROI perspective. I've never paid for data and I never intend to and yes, that means that it sometimes takes longer for it to appear on HIBP, but it's the right thing to do.

Ambulance chasing is another behaviour that's well and truly into the dark end of shady. I recently had a bunch of people contact me after an organisation emailed them to advise that addresses from their company were found in a breach. Then I watched just last month as someone representing another org hijacked Twitter threads mentioning HIBP in order to promote their own service (I then had to explain what was wrong with this practice, something I later highlighted in another thread). In all these cases, financial incentive either from directly monetising the service itself or indirectly promoting other services associated with the organisation appears to be the driver for shady practices.

We should all be beyond reproach when handling this data.

Summary

Being completely honest, it would have to be less than one in one thousand pieces of feedback I get that are critical or even the least bit concerned about the HIBP model as it stands today. It's a very rare thing and that may make you wonder why I even bothered writing this in the first place, but the truth is that it helped me get a few things straight in my own head whilst also providing a reference point for those who do express genuine concern.

HIBP remains a service that first and foremost serves to further ethical objectives. This primarily means raising awareness of the impact data breaches are having and helping those of us that have been stung by them to recover from the event. Even as I've built out commercial services for organisations that have requested them, you won't find a single reference to this on this site; there's no "products" or "pricing", no up-sell, no financial model for consumers, no withholding of information in an attempt to commercialise it, no shitty terms and conditions that you have to read before searching and not even any advertising or sponsorship. All of this is simply because I don't want anything detracting from that original objective I set forth.

I'll close this post out by saying that there will almost certainly be changes to this in the future. Indeed, it's constantly changed already; sensitive breaches, rate limits and the removal of the pastes listing are all examples of where I've stepped back, looked at the system and thought "this needs to be done better". Very often, that decision has come from community feedback and I'd like to welcome more of that in the comments below. Thank you for reading.