There’s been a huge amount of activity on Have I been pwned? (HIBP) in recent weeks, particularly in the wake of the Adult Friend Finder breach which drew a lot of attention. The activity has comprised organic browser-based traffic as well as hits to the API. The latter in particular is interesting as you can see a steady rate of traffic (or a steady increase in traffic) suddenly interrupted by a massive increase which then sits at a threshold for a period of time. Sometimes that’s minutes, sometimes it’s even days.

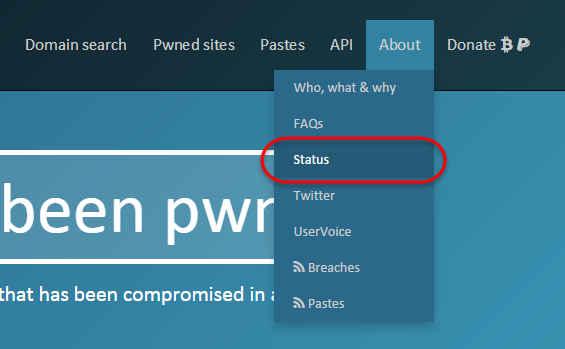

I often get asked “I want to hit your API but I don’t want to disrupt the service – is that ok?” and I’m always just slightly amused as the site serves ridiculous levels of traffic without breaking a sweat. And then it scales out ten times over! To date, scale has never even been close to a problem which is great, but I wanted to add even further transparency to the service (beyond the extensive blog posts I’ve written, that is), and share my New Relic stats with the world. These are live stats – real time stats – and they’re now accessible here:

I’m going to list them each below as live embedded charts because firstly, it will allow me to explain how to interpret them and secondly, it will mean there’s a location entirely independent of the HIBP website where people can see what the service is doing. If they can’t access the site itself, they can always come here to this blog post and see what’s going on. Oh – and thirdly, it means that if you’re hitting the API then you can see the traffic you’re causing and how the system is performing as a result. All nice and transparent :)

What’s happening now?

This chart shows requests for the last 30 minutes which is the smallest increment of time New Relic supports:

It’s a great indication of how much load the system is currently under as measured by requests per minute. This is not page views or users but rather the individual requests to the site. These requests comprise things like web pages, images, CSS and of course, hits to the API. It’s the API hits in particular where you’ll see crazy spikes in traffic as a consumer ramps up and starts pulling masses of data. You’ll recognise API traffic by the (usually) flat throughput as the consumer reaches its maximum potential, whether that be bandwidth or back end processing or something else. Certainly it’s not HIBP’s maximum potential (although I’ve yet to find that so it’s theoretically possible that you might see that one day…)

How is HIBP performing now?

When that load in the aforementioned graph really spikes, what does that do to the site performance? This graph gives the answer over the same time period as the previous one (30 mins) so you can directly compare load with performance:

There are a couple of interesting things you’ll observe here. One is that the MSSQL component is almost non-existent. There’s no relational database hit when you search for a breach (although it does cache metadata about the breach on a 5 minute refresh cycle); the DB generally only gets hit when users subscribe to the free notification service or perform actions like a domain wide search. The other interesting thing is that the average response time usually goes down under heavy load. This is because load is often driven by API hits and searching through a couple of hundred million rows in Azure Table Storage is actually much more efficient than, say, sending an email via SMTP which is what the notification service does (you need to verify your email address). What it means is that the average response time comes way down when the proportion of fast requests goes up.
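To make that averaging effect concrete, here’s a rough sketch in Python. The latencies and request counts are made-up illustrative numbers (not real HIBP or New Relic figures) and `average_response_ms` is just a hypothetical helper; the point is only that adding lots of fast requests to a fixed number of slow ones pulls the mean down:

```python
def average_response_ms(request_mix):
    """Weighted mean response time for a list of (request_count, latency_ms) pairs."""
    total_requests = sum(count for count, _ in request_mix)
    total_time_ms = sum(count * latency_ms for count, latency_ms in request_mix)
    return total_time_ms / total_requests


# Assumed, illustrative latencies only (not measured values).
FAST_MS = 50     # e.g. an API search served from table storage
SLOW_MS = 2000   # e.g. a request that sends a verification email via SMTP

# Quiet minute: 900 fast requests and 100 slow ones.
quiet_minute = [(900, FAST_MS), (100, SLOW_MS)]

# Busy minute: an API consumer adds 9,000 more fast requests;
# the number of slow requests is unchanged.
busy_minute = [(9900, FAST_MS), (100, SLOW_MS)]

print(average_response_ms(quiet_minute))  # 245.0
print(average_response_ms(busy_minute))   # 69.5
```

Nothing got faster in the busy minute; the slow requests simply became a much smaller share of the total, which is why the average on the graph can drop while throughput spikes.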

What's happened over the last week?

This is just the first graph but over a one week period:

I wanted to include this to show just how much load fluctuates over time. You’ll usually see erratic spikes and troughs (certainly that’s the status right now) as there are periods where the API is hammered then load backs off. It’s a good example of how the service needs to respond to vastly different traffic patterns over time. It’ll also show you if there have been any complete outages over this period.

Edit: When I began seeing volumes of DDoS traffic against the site later in 2016, I removed these reports for fear they were helping attackers gauge the success of the attacks. I may reinstate them later on but for the moment, I'm keeping them offline.