Only a couple of months ago, I did a talk titled "The Responsibility of Disclosure: Playing Nice and Staying Out of Prison". The basic premise was to illustrate where folks finding security vulnerabilities often go wrong in their handling of the reporting, but I also wanted to show how organisations frequently make it very difficult to responsibly disclose the issue in the first place. Just for context, I suggest watching a few minutes of the talk from the point at which I've set the video below to start:

Time and time again, I run into incidents where good people hit brick walls when trying to do the right thing. For example, just this weekend I had a Twitter follower reach out via DM looking for advice on how to proceed with a risk he'd discovered when signing up to Kids Pass in the UK, a service designed to give families discounts in various locations across the country. What he'd found was the simplest of issues and one which is very well known - insecure direct object references. In fact, that link shows it's number 4 in the top 10 web application security risks and it's so high because it's easy to detect and easy to exploit. How easy? Well, can you count? Good, you can hack! Because that's all it amounted to, simply changing a short number in the URL. You look at the address bar of a page you've legitimately arrived at, add 1 to the number there, then wammo! You've got someone else's data.

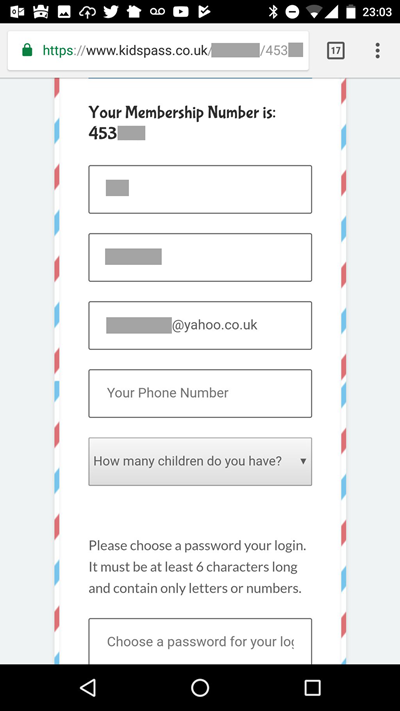

He included a sample that illustrated the problem:

I've obfuscated part of the URL because to the best of my knowledge, this still isn't fixed. The fields I've blanked out contain first name, last name and obviously email address and they all belong to someone other than the guy who reported it to me. My advice from here was very simple as there was really only one thing to do:

Get in touch with them, and definitely don't access any other data from here, it's pretty clear there's a flaw now

And then, just to be sure the issue got traction, I quote-tweeted an earlier message he'd sent them and called for some help:

Any UK followers have a contact at @KidsPass? I've seen this, it's not pretty. https://t.co/GkTJO4oE3z

— Troy Hunt (@troyhunt) July 29, 2017

Job done, good deed completed, everyone will go away happy, right? Except that a mere 11 hours later, Alex (whose name I'm using with his permission, plus of course he's publicly tweeted them from his account anyway) discovered that Kids Pass wasn't, well, real happy to have heard from him:

Whoa! What?! Why? He also sent me a copy of the DMs he'd sent them and they were quite reasonable; certainly they didn't read like he was trying to stitch them up. Plus, as you can see from the earlier embedded tweet, he looks like a perfectly normal, nice guy. He doesn't even have a hoodie! I immediately got ready to share this unfolding story via Twitter and ask (again) for help, but went to take another quick look at their profile first. And that's when I saw it:

They'd blocked me too! Why on earth would they do this?! Literally the only tweet I'd sent was the one embedded above and for that I was blocked. I hadn't even sent them a DM so why they purely wanted to keep me from viewing their timeline whilst logged into Twitter as myself was a total mystery. Somehow, I was guilty by association.

Right about here you should be getting a sense of why I've mentioned the difficulty of responsible disclosure in the title of this post. This is probably the simplest, most ethical example I could think of when it comes to doing the right thing by a company that is clearly doing the wrong thing (or at least their code is doing the wrong thing), yet here we were, both Alex and I blocked from any further communications. But if they thought that would stop people from trying to help them, they had another thing coming:

UK friends - can someone get in touch with these clowns? They have a serious vuln and are blocking anyone who tries to contact them. pic.twitter.com/tQLf1nugLj

— Troy Hunt (@troyhunt) July 30, 2017

You can read through the barrage of responses that followed by drilling down on that thread and even I was a bit surprised with the traction it got, especially for a Sunday when things are normally a little quiet on Twitter. Shortly after things started to light up on their mentions, they unblocked me and DM'd to the effect that "our IT department is looking into it". Thing is, as far as I know they don't even know what they're looking into because they never asked me! They also didn't ask Alex who never provided details in his original DMs. So here you've got two people saying "you've got a serious risk leaking customer details" and they don't even want to know what it is! But they're looking into it anyway, apparently.

However, there's another way they might know where to look:

Hey @KidsPass - when do you plan on doing anything about the massive security issues with your website? https://t.co/CO447jmJoK

— Jem (@jemjabella) July 11, 2017

This was a few weeks ago and it was brought to my attention when the author of that blog post, Gareth Griffiths (Gaz), reached out after seeing my earlier tweets. The original premise of that blog post was to call them to account for a practice that was brought to their attention all the way back in December:

@KidsPass You just sent me a password reminder with my password in plain text - I hope you're not storing it in plain text. #security

— Gareth Griffiths (@gazchap) December 26, 2016

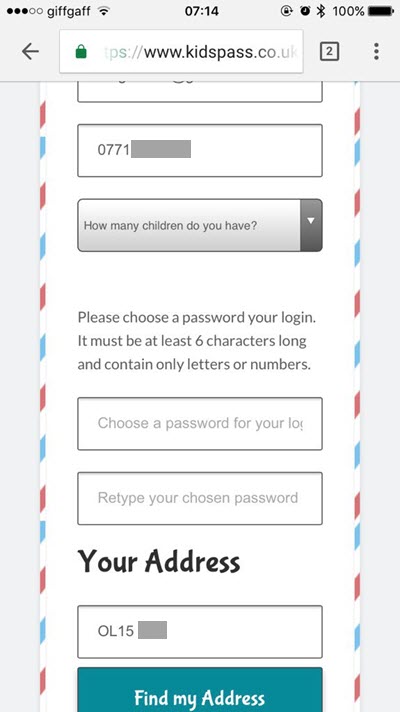

It's becoming quite rare these days to see a site email you your password (which also indicates they're storing it inappropriately in a reversible format), but here they were, sending them around with reckless abandon. However, while writing the post Gaz found an even more serious issue:

The problem with this is that the activation code is entirely predictable – it’s not a 32 character token like many websites use, and all you need to do to access other people’s personal data is change the code in the URL that the link takes you to. It would be horrifically easy for someone to abuse this and harvest personal details – names, email addresses and postcodes.
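To make the contrast in that quote concrete, here's a minimal Python sketch of a predictable code versus an unguessable token. The function names and the sequential scheme are assumptions for illustration only; nothing here is taken from the Kids Pass code, which I haven't seen.

```python
import secrets


def sequential_code(last_issued: int) -> str:
    """Hypothetical predictable scheme: the next code is the previous one plus one."""
    return str(last_issued + 1)


def random_token() -> str:
    """16 random bytes from the OS CSPRNG, hex-encoded to 32 characters."""
    return secrets.token_hex(16)


# Anyone holding code 1234 can guess that 1235 belongs to the next person.
print(sequential_code(1234))

# A random token gives an attacker nothing to count up from.
print(random_token())
```

An unguessable token only makes enumeration impractical. The page behind the link still needs to check that whoever is asking is actually allowed to see the record.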

Gaz sent me evidence of the issue and like in Alex's screen cap, it clearly showed the leaking of personal data (phone number and physical address in this case):

Which is precisely what Alex had also tried to warn them about! So now here you have multiple people finding the same risk which would allow for the easy exfiltration of data en masse, and this is just the guys we know about. Who else found it and didn't let them know? (And incidentally, in light of evidence that customer data was accessed by multiple unauthorised third parties, will there be any disclosure forthcoming to their existing subscriber base?) Who else actually scraped customer data out of their system? I've got no idea and they may not have either, but what I do know is that we've seen this particular class of risk exploited multiple times before.

For example, in 2010 114k records about iPad owners were extracted from AT&T using a direct object reference risk. The following year it was First State Superannuation in Australia who were found exposing customer data in the same way and again, the "exploit" was simply incrementing numbers in the URL. Same again that year with Citigroup in the US having an identical risk and time and time again, we see this same simple flaw popping up.
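The fix for this class of flaw is not exotic: check that the record being requested belongs to whoever is logged in. Here's a rough Python sketch of the vulnerable pattern next to the checked one. The `Member` type, the in-memory `MEMBERS` store and the function names are all hypothetical stand-ins, not anyone's real code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    member_id: int
    name: str
    email: str


# Hypothetical stand-in for a database table keyed by a sequential ID.
MEMBERS = {
    1001: Member(1001, "Alice Example", "alice@example.com"),
    1002: Member(1002, "Bob Example", "bob@example.com"),
}


class Forbidden(Exception):
    """Raised when the caller asks for a record they don't own."""


def get_member_insecure(requested_id: int) -> Member:
    # Trusts the ID from the URL outright: this is the direct object reference flaw.
    return MEMBERS[requested_id]


def get_member_checked(session_member_id: int, requested_id: int) -> Member:
    # Compares the requested ID against the identity held in the server-side session.
    if requested_id != session_member_id:
        raise Forbidden("requested record does not belong to the logged-in member")
    return MEMBERS[requested_id]
```

With the insecure version, a member logged in as 1001 who changes the number to 1002 gets Bob's details back. With the checked version the same request raises `Forbidden`, no matter how well they can count.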

But Kids Pass didn't completely ignore the earlier tweets, in fact after Jem brought this to their attention yet again, they gave her a response:

Thanks for your comments, we are audited regularly to ensure the site is secure. This has been sent to the IT Director who will respond.

— Kids Pass (@KidsPass) July 11, 2017

Now I don't know what sort of audit misses both the plain text storage and the insecure direct object references which were clearly outlined in Gaz's post, but now here we are, weeks later and the company is blocking people trying to draw their attention to exactly the same thing.
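The password storage side is equally well-trodden ground. As a minimal sketch using only Python's standard library, here's a salted, one-way hash that can verify a password without the site ever being able to email it back. The iteration count and function names are assumptions for illustration; a real system should follow current guidance or use a dedicated password hashing library.

```python
import hashlib
import hmac
import os

# Illustrative work factor only (an assumption); tune to current guidance.
ITERATIONS = 200_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest). Store both; the password itself is never stored."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the digest from the attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

Because the digest can't be reversed, a "password reminder" email becomes impossible by design and the site has to offer a reset instead.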

You can explain away a single poorly handled incident, for example blocking Alex. You could even explain away blocking me too as perhaps it was the one social media bloke who was drunk or stoned and just decided to nuke both of us because he was having an off day. But it doesn't explain away ignoring Gaz nor does it account for the egregiously bad error that sparked this weekend's dramas and, short of any evidence to the contrary, remains unfixed. And it definitely doesn't explain how they can be "audited" yet still have a risk that can be exploited by anyone who knows how to count. Now we're looking at a pattern of behaviour and that's a lot more worrying.

Inevitably, when an organisation's behaviour puts them in the spotlight as it has now done for Kids Pass, all sorts of people are going to start paying more attention to their online things:

On a more positive note, I expect @KidsPass are getting LOTS of free vulnerability assessments today ? https://t.co/4Z1fOYpK9s

— Per Hammer (@perhammer) July 30, 2017

And like clockwork, that does indeed seem to be what's now happening:

Unfortunately, @KidsPass is a bit of a mess. Another UK business which will likely go under #SQLi #DirectObjectReference #plainTextPasswords pic.twitter.com/gk8AiNLp68

— Paul Moore (@Paul_Reviews) July 30, 2017

Right about now would be a very good time for Kids Pass to go through their services and take a very critical look at how the whole thing is put together (pro tip: don't use the same auditor, guys).

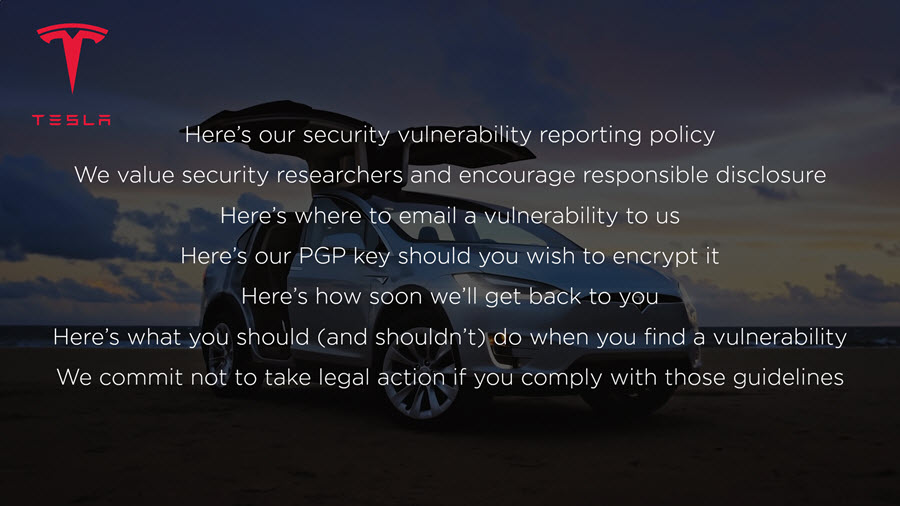

Finally, let me finish back where I began with the responsible disclosure talk I mentioned earlier, in particular the slide on Tesla's vulnerability reporting policy:

This is not hard stuff and it basically amounts to text on a page. Consider whether your own organisation has something to this effect and is actually ready to handle disclosure by those who attempt to do so ethically. Listen to these people and be thankful they exist; there's a whole bunch of others out there who are far less charitable and by the time you hear from those guys, it's already too late.