Organisations don't plan to fail. Probably the closest we get to that in the security space is password hashing, which for all intents and purposes is an acknowledgement that one day, you may well lose your users' passwords. But organisations rarely plan for how they should handle data breaches, and when an incident does happen (which seems to be a near certainty these days), they're left unprepared: they're in unfamiliar territory, there's enormous stress and pressure on them and, frankly, they usually react pretty badly.

I've seen a lot of examples of how organisations have dealt with incidents over the years. I've been inside the organisation, advising the organisation, often disclosing incidents to the organisation and of course, like everyone else, watching how they handle incidents from the outside. I've seen enough to recognise both good and bad patterns and I'm hoping that by highlighting them here we can learn from the precedents that have been set. Ideally, this will serve as a checklist for those who need to communicate an incident publicly, and a quick read will keep them from making many of the mistakes I'm about to outline.

Make it easy to submit security reports

It's hard to explain just how difficult reporting a security issue can be unless you've been through it yourself, preferably many times with organisations of all sizes. There are degrees of difficulty, of course, but even at the best of times it's rarely a smooth process. Let's start with how bad it can be, which means starting with CloudPets, who had a particularly bad run the other day. Here's what we know of the disclosure timeline (largely covered in the updates at the bottom of my post):

- Oct: Paul Stone of Context Security attempted to contact CloudPets

- Dec 31: Victor Gevers attempted to alert CloudPets via their ZenDesk support

- Dec 31: My (anonymous) contact attempted to alert CloudPets via their published support address

- Dec 31: My contact attempted to alert CloudPets via the WHOIS record contact

- Jan 4: My contact attempted to alert Linode, CloudPets' hosting provider

- Feb: The reporter I worked with attempted to contact them via:

  - Multiple email addresses

  - Many different phone numbers

  - Their development partner

Much of this happened before their database was pilfered, ransomed and, eventually, deleted. They could have significantly reduced the impact of this event simply by being receptive but instead, they now find themselves here:

Haha. All the compromised #cloudpets at the 99 cent store. I like how they covered the name with a sticker hoping nobody notices. pic.twitter.com/Bb2OLeTscn

— Alex von Gluck (@kallisti5) March 20, 2017

So how do you make it easy for people to submit security reports? Let's take a look at Tesla's Security Vulnerability Reporting Policy and in particular, the following things they do:

- They actually have a policy!

- They have an email address and it's specifically for reporting vulnerabilities

- They publish their PGP key so people can encrypt their messages

- They make it clear what you shouldn't do when looking at their security

- They set an expectation of how quickly they'll respond

The total cost of this is about zero; there is no easier way to get people submitting bugs responsibly than by doing this. This isn't about bug bounties either (although they do run a program with Bugcrowd), it's simply about making it easy for people to do the right thing.

Treat security reports with urgency

This sounds like a ridiculously obvious thing to say, but failing to do it can be enormously frustrating for those of us disclosing vulnerabilities ethically and enormously destructive when contact is coming from shadier parties. Let's look at some examples of each.

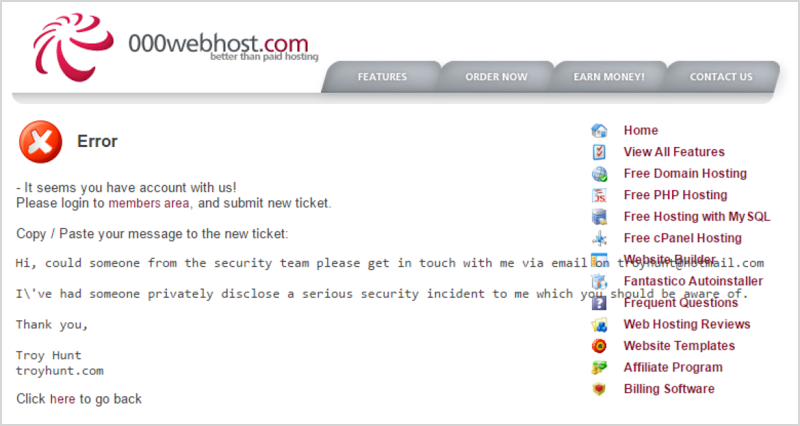

In October 2015, someone handed me 13M records from 000webhost. I tried to get in touch with them via the only means I could find:

I won't delve into all the dramas again here; suffice it to say I tried for days to get through to these guys, and while their support people acknowledged my report of a serious security incident, they couldn't put me in touch with anyone who could deal with it appropriately. I eventually went to the press with the issue, and their first proper response came only after they'd read about their own compromise in the news headlines.

This attitude of failing to take reports seriously and deal with them promptly is alarmingly common. Let's go back to CloudPets for a moment; their CEO gave a journalist this comment:

@lorenzoFB "We did have a reporter, try to contact us multiple times last week, you don't respond to some random person about a data breach.

— Michael Kan (@Michael_Kan) February 28, 2017

Yes you do! Random people are precisely the kind you want to respond to because they're the ones that often have your data! Here's a perfect example of that:

. @PayAsUGym We have access to your server + database, please contact me via DMs for more info, ignoring us is not a solution pic.twitter.com/HjixVT3KOD

— 1x0123 (@real_1x0123) December 15, 2016

Now at this point, the individual has to absolutely, positively be taken seriously. Ignoring the tweet is definitely not a strategy and whilst engaging with someone involved in criminal activity may not seem particularly desirable, right now they hold the balance of power. In this case, PayAsUGym ignored the guy despite my attempts to get their attention and ultimately, their data was dumped publicly.

You must disclose

Once an incident is confirmed, one of the first things we need to establish is whether it needs to be publicly disclosed. I'm going to refer to a bunch of resources from the EU here because their General Data Protection Regulation (GDPR) is coming into effect next year and it's a good modern incarnation of how data breaches should be handled.

There's a good, succinct piece on this by Varonis which talks about whether or not the incident is "likely to affect" those caught up in it. Whilst the term sounds a bit like legal-speak, they clarify it as follows:

According to the compliance attorney we spoke to, any personal data identifiers – say, email addresses, online account IDs, and possibly IP addresses — could easily pass the likely-to-affect test

That's pretty much any data breach today; it's very rare to see one without email addresses. Now if that's all it was, you could argue on the one hand that "affect" may amount to nothing more than spam, but on the other hand, it's personal information and you've lost it! Plus, you could argue that even an email address alone could be used for malicious attacks such as phishing or, as we saw after the Ashley Madison incident, even ransom.

Here's an example of where this goes all wrong: last year, the Minecraft community known as Lifeboat was hacked. Roughly 7 million accounts started circulating and were subsequently passed to me. When confronted about the incident by the media, here's what Lifeboat had to say:

When this happened [in] early January we figured the best thing for our players was to quietly force a password reset without letting the hackers know they had limited time to act

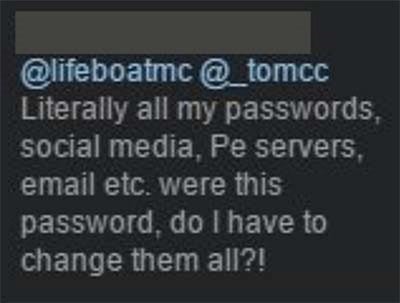

Wow! No. It's not just users' accounts on the service itself that get put at risk; their accounts on other services are at risk as well.

This is really essential to remember: when you have a data breach, your actions (or lack thereof) have a significant impact on many other services. No, people shouldn't reuse their passwords but we all know that's enormously common and it's a risk that can be reduced by early notification of impacted customers.

Disclose early

Specifics may differ in various other jurisdictions, but the "disclose early" sentiment should apply across borders because, quite simply, it's in the best interests of those who are impacted by the breach. Here's a good summary under the GDPR's heading of Notification of a personal data breach to the supervisory authority:

Therefore, as soon as the controller becomes aware that a personal data breach has occurred, the controller should notify the personal data breach to the supervisory authority without undue delay and, where feasible, not later than 72 hours after having become aware of it, unless the controller is able to demonstrate, in accordance with the accountability principle, that the personal data breach is unlikely to result in a risk to the rights and freedoms of natural persons

Now this covers notification to the "supervisory authority"; what about the individuals themselves? The disclosure to "natural persons" is described as follows:

Natural persons should be informed without undue delay where the personal data breach is likely to result in a high risk to the rights and freedoms of natural persons, in order to allow them to take the necessary precautions

The term "without undue delay" is a bit of legal-speak again, but the intention is clear, and the 72-hour timeline for notifying the supervisory authority is explicit. Failing to meet this timeline can have serious consequences too. The ICO in the UK outlines the penalties:

Failing to notify a breach when required to do so can result in a significant fine up to 10 million Euros or 2 per cent of your global turnover
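The ICO's summary says "10 million Euros or 2 per cent"; the regulation itself (Article 83(4) of the GDPR) sets the ceiling for an undertaking at whichever of the two is higher, with turnover meaning total worldwide annual turnover for the preceding financial year. As a rough sketch of that arithmetic, assuming turnover is given as a whole number of euros (the function and constant names are purely illustrative):

```python
# Hypothetical helper illustrating the GDPR Art. 83(4) ceiling for an undertaking.
FIXED_CAP_EUR = 10_000_000  # EUR 10 million
TURNOVER_PERCENT = 2        # 2% of worldwide annual turnover


def max_notification_fine_eur(worldwide_turnover_eur: int) -> int:
    """Upper bound of the fine: the fixed cap or 2% of the preceding
    year's worldwide turnover, whichever is higher."""
    # Integer arithmetic avoids floating-point noise in the percentage.
    percentage_cap = worldwide_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, percentage_cap)


print(max_notification_fine_eur(100_000_000))    # 10000000 (fixed cap applies)
print(max_notification_fine_eur(2_000_000_000))  # 40000000 (2% limb applies)
```

The crossover sits at EUR 500 million of turnover: below that, the fixed EUR 10 million figure is the ceiling; above it, the percentage takes over. Either way these are maximums, not what a regulator will necessarily impose.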

I don't want to get too caught up with the GDPR but, simply put, you cannot screw around with notifications; they should be one of the very first things you focus on in the wake of an incident. A case like Capgemini and Michael Page, where it took 11 days, could result in serious consequences and, let's face it, it simply doesn't take that long to send customers emails, even when there are millions of them. The same goes for Adult Friend Finder, who took a week to disclose; delays like this are more reflective of lawyer and PR involvement than they are of genuine concern for customers.

Unfortunately, lengthy delays like this are not unusual:

#PageGroup thank you for letting me know that my details were stolen after 11 DAYS!! I'm certain that who did it had no malicious intent!!!

— Catalina Pascu (@CatalinaPascu) November 11, 2016

It's not just unacceptable in the "this really isn't cool" way; it's also unacceptable under the incoming GDPR legislation. In fact, 11 days is more than 3 times the 72-hour maximum the GDPR allows for notifying the supervisory authority.
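To put those delays in concrete terms, here's a small Python sketch of the 72-hour arithmetic. The moment of becoming aware is a made-up example (the incidents above don't state one precisely) and the helper names are hypothetical; the only rule encoded is the "not later than 72 hours after having become aware" window quoted earlier.

```python
from datetime import datetime, timedelta

# GDPR window for notifying the supervisory authority, measured from the
# moment the controller becomes aware of the breach.
AUTHORITY_WINDOW = timedelta(hours=72)


def authority_deadline(became_aware: datetime) -> datetime:
    """Latest time to notify the supervisory authority under the 72-hour rule."""
    return became_aware + AUTHORITY_WINDOW


def window_multiple(delay: timedelta) -> float:
    """How many 72-hour windows a given notification delay spans."""
    return delay / AUTHORITY_WINDOW  # timedelta / timedelta gives a float


# Hypothetical moment of awareness, for illustration only.
aware = datetime(2017, 1, 2, 9, 0)
print(authority_deadline(aware))  # 2017-01-05 09:00:00

for label, delay in [("11 days", timedelta(days=11)), ("1 week", timedelta(weeks=1))]:
    print(f"{label}: {window_multiple(delay):.2f}x the 72-hour window")
# 11 days: 3.67x the 72-hour window
# 1 week: 2.33x the 72-hour window
```

Eleven days is 264 hours, roughly 3.7 times the window, and even a week is well over double it.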

When an incident such as a data breach occurs, people inevitably look for more information. They hear a snippet from friends or read a piece on the news and they're curious. Making factual information readily accessible via a formal channel is enormously important as it allows the organisation to put their message - and the facts - forward to the public.

Going back to PayAsUGym for a moment, this is where they really got it wrong. There was no communication on their Twitter account, nothing on their Facebook page and no messaging on their website (no notice on the front page and no news or blog section). However, they were still broadcasting promotional messages via social media, which is the digital equivalent of sticking your fingers in your ears and saying "la-la-la-la-la-la-la-la".

Compare that to Ethereum, who had their forum hacked a few days later. They were quick to write a blog post about exactly what happened and socialise it via Twitter:

Security Alert: Ethereum forums database leak. See blog for details https://t.co/E14ObMfF4v

— Ethereum (@ethereumproject) December 19, 2016

As well as on Facebook:

What this allowed them to do was make their version of events the canonical one. That's the source the media then referred to; their stated positions were the ones that made headlines and there was much less left to interpretation. When an organisation doesn't have a public position on a data breach, it leaves things open to speculation and often results in people drawing incorrect conclusions. Emailing customers is not enough because there will always be other people forming opinions (i.e. the social media peanut gallery), and it's important to influence their views on the incident too.

Protect accounts immediately

Clearly, once a database of user credentials has been exposed, those users are at risk of having their accounts accessed. It's standard operating procedure to force password resets on impacted accounts and that needs to happen as a matter of priority, ideally in combination with the disclosure notice which is sent to impacted parties.

On December 17, Bleacher Report notified customers of a breach. Within their notification, they talk about password resets:

If you do not change your password within 72 hours, your existing password will cease to work and you will have to click the “Forgot Password?” link in order to reset your password.

This is both reckless and unnecessary, even more so because they'd actually identified the incident more than a month earlier. There's also something to be said here about the timeliness of disclosure, but clearly, the longer stolen credentials remain valid, the longer they can be exploited.
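The alternative to a 72-hour grace period is to invalidate credentials the moment an account is known to be in the breach. Here's a minimal Python sketch of that idea. The `Account` record and the `force_reset` and `login` helpers are hypothetical rather than any particular site's implementation, PBKDF2 from the standard library stands in for whatever password hashing a real system uses, and the iteration count is an arbitrary example value.

```python
from __future__ import annotations

import hashlib
import hmac
import os
from dataclasses import dataclass
from typing import Optional

ITERATIONS = 100_000  # example work factor, chosen arbitrarily for this sketch


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative only."""
    user_id: int
    salt: bytes
    password_hash: Optional[bytes]
    must_reset: bool = False


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def create_account(user_id: int, password: str) -> Account:
    salt = os.urandom(16)
    return Account(user_id, salt, hash_password(password, salt))


def force_reset(accounts: dict[int, Account], breached_ids: set[int]) -> None:
    """Invalidate every breached account's password immediately; there is no
    grace period during which the leaked password keeps working."""
    for uid in breached_ids:
        account = accounts[uid]
        account.password_hash = None  # nothing left for the old password to match
        account.must_reset = True     # user has to go through the reset flow


def login(account: Account, password: str) -> bool:
    if account.must_reset or account.password_hash is None:
        return False
    candidate = hash_password(password, account.salt)
    return hmac.compare_digest(candidate, account.password_hash)  # constant-time compare


accounts = {1: create_account(1, "hunter2"), 2: create_account(2, "correct horse")}
force_reset(accounts, {1})
print(login(accounts[1], "hunter2"))        # False: breached account locked until reset
print(login(accounts[2], "correct horse"))  # True: unaffected account still works
```

A real system would also need to revoke any existing sessions for those accounts, and pairing the reset with the disclosure email means users know why their old password no longer works.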

Avoid misdirection and false or misleading statements

This might sound like the most ridiculously obvious thing to say, but don't lie when disclosing an incident. I know the truth may hurt, but the harsh reality of most data breaches is that there's been a failure at some point and now you need to own that.

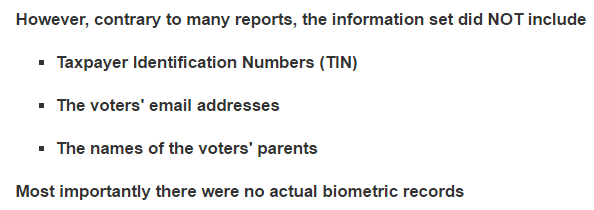

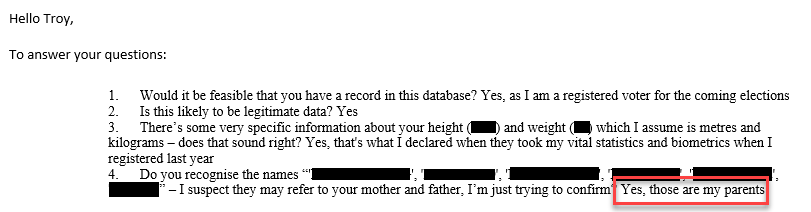

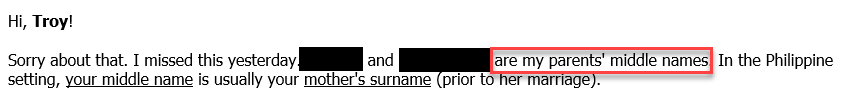

Case in point: in April 2016, the Philippines' Commission on Elections, known as COMELEC, was breached. In my blog post on that incident, I wrote about how, in verifying its legitimacy with HIBP subscribers, many of the personal attributes in the data were confirmed, including the names of voters' parents. Fast forward to June and they released a statement which included the following:

This was a blatant lie. Not a misinterpretation, not a question of degrees or shades of grey, but an outright lie. There were over 228k email addresses in that breach; I contacted a small number of the people behind them who were subscribed to HIBP and received responses like this:

This is not open to interpretation: Filipino citizens had their email addresses breached, I emailed some of them and they identified their parents in the incident. There's also no denying the presence of fields denoting biometric data, although the practical application of it is not immediately clear. Regardless, the email addresses and parents' details were present.

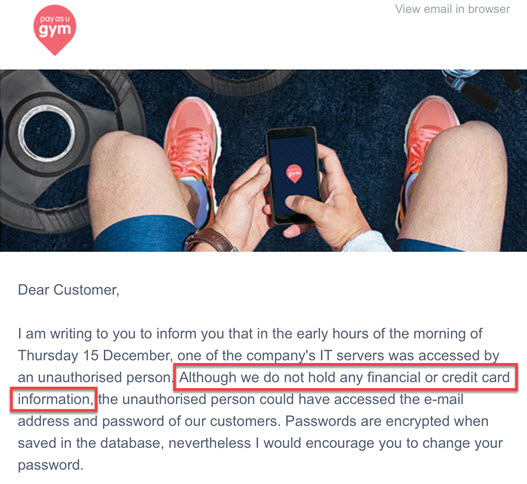

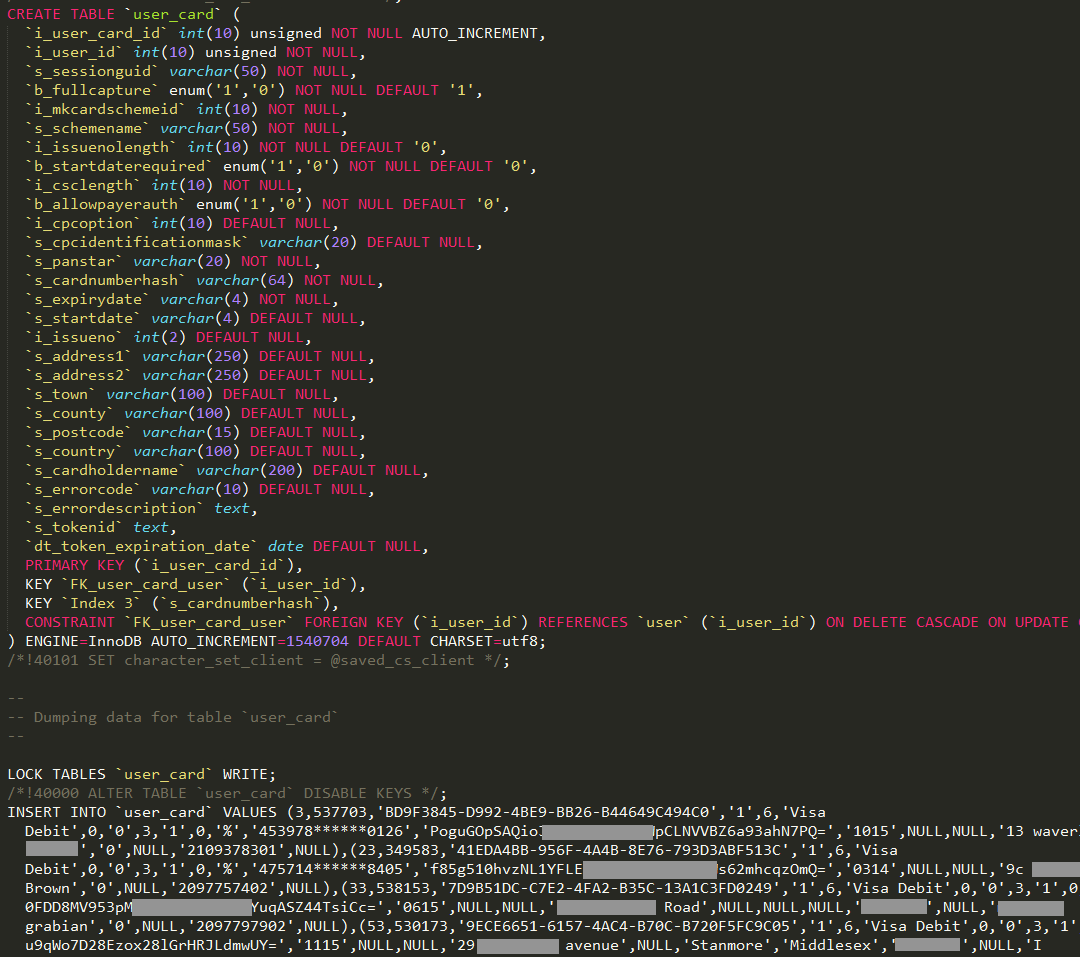

Same deal again with PayAsUGym who I mentioned earlier. They issued a statement as follows after their incident:

The highlighted point is significant as compromised card data can trigger unwanted attention from PCI which can have pretty serious consequences. Unfortunately though, the statement was false and one look at the data which was widely circulated after the incident tells the story:

In this particular case, PayAsUGym later had to make the very embarrassing admission that actually, yes, credit card data had been compromised. (Incidentally, this is also something they would have known had they been following discussion about this in public forums explicitly showing exposed card data!)

There will always be a temptation to downplay an incident like this, but misdirection or misleading statements (let alone outright lies) won't do the organisation any favours and pose a real risk of backfiring spectacularly if it's later held to account.

Don't be vague

Closely related to the previous point, it's really important to avoid statements which are misleading, unclear or otherwise fail to contribute to the objective of contacting customers in the first place. For example, this one from JobStreet.com:

@troyhunt another day, another breach... @JobStreetSG pic.twitter.com/BlU7bTBl9I

— KristoferA (@KristoferA) December 29, 2016

I've read this over and over again and I'm still confused: was the data on the Malaysian forum actually legitimate? They refer to a "claim" as though it's not substantiated, but then say you should probably reset your password... yet they offer no evidence to suggest you need to! You can see how confusing this can be and, per the earlier comment on the Lifeboat incident, remember that people who've reused their passwords across services will be wondering what this actually means for their broader risk profile.

Here's a good example of a much clearer and more transparent disclosure courtesy of Soundwave:

Awesome looks like @soundwave used Production data in test and left it there after being acquired by @Spotify

/cc @troyhunt pic.twitter.com/sfHBy5jMXL

— Torvos (@torvos) March 17, 2017

Look at how much was clearly spelled out:

- They put production data in a test database

- They hashed the passwords with MD5

- They list all the impacted data attributes

Now let's be clear - production data in a test database with passwords stored as MD5 is not a pleasant story to tell - but it was the right story to tell under the circumstances. They've been clear and transparent and that's enormously important.

Explain what actually happened

Here's a good example of where lack of information can lead to speculation and misunderstanding. When I wrote about the leak of Michael Page data, I included this image that was sent to me when it was first reported:

If you were to look at that image, you would reasonably assume that data for countries such as Australia was exposed - there is literally a file called "mp_australia.sql.gz"! That then led to headlines such as The private information of Australian jobseekers has been exposed, which was a perfectly reasonable conclusion to draw. However, Michael Page's media statement listed only 3 countries as having been impacted: China, the Netherlands and the UK.

Now I have no insider knowledge on this so I'm just going to speculate, but I suspect the gap here is that they concluded no other files had been downloaded. This would have been so easy to explain and would immediately have dispelled speculation about why their statement appeared to come up short. It wouldn't prove that none of the other data was accessed; we're talking about a badly misconfigured server, and any such conclusion rests on assumptions about what was successfully logged, when, and what levels of access were available (logs are frequently tampered with to hide tracks). But you'd get a much better sense of transparency if the situation was simply explained in this fashion.

Compare that to the Mountain Training breach last year (and do read the detailed notification):

This is a really well written breach notice from @MtnTraining, here's the whole thing: https://t.co/5jaw6VYjTm pic.twitter.com/u87t9qqDmP

— Troy Hunt (@troyhunt) November 23, 2016

This talks about how everything unfolded with a great degree of transparency. When you read this, you can't help but feel they're being open and honest about the situation; it doesn't feel like they're covering anything up or misleading people. This isn't hard to do, it doesn't "jeopardise ongoing investigations" and it demonstrates both an understanding of what went wrong and a willingness to communicate truthfully with customers.

Keep customers updated

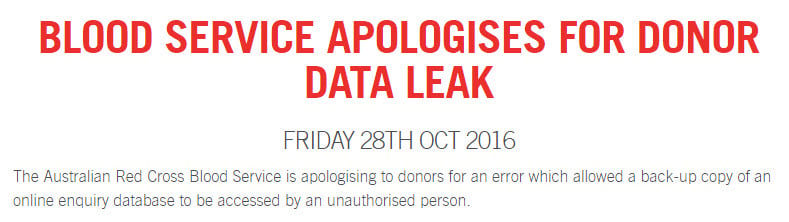

One thing I really liked about the Red Cross' handling of their incident was the follow-up. A couple of weeks after initial disclosure, the CEO sent a message that began like this:

I am writing to you with an update on the data security issue we last contacted you about at the end of October

She went on to talk about the outcome of investigations they'd since done, talked about further strengthening their processes and then apologised for the incident:

I want to say again how truly sorry we are that this happened

When initial communication with customers is done promptly, the impacted organisation rarely has all the answers upfront. For example, per the Red Cross above, there are often investigations that are ongoing. It can take time to join the dots and work out what actually happened. This example from Plex illustrates that - take note of the timeline:

- July 2: They write about learning of an intrusion which occurred at 1pm the previous day (nice and prompt!)

- Later that same day: They add an FAQ to address specific questions they were seeing from customers

- July 6: They update the post again and advise "the attackers gained entry via exploiting bugs in the forums software"

The point is that they kept people informed as they learned of new information. They didn't wait until they knew everything (we already know why that would be problematic); instead, they added more information and shared what they knew when they could.

Follow-up with customers serves another valuable purpose as well, and that's to explain how you're going to avoid this happening again. Data breaches occur because somewhere, someone screwed up. Not only did they screw up, but the organisation failed to catch that screw up before it resulted in something going very badly wrong. In order to regain trust, you need to convince customers that whatever root cause was behind that failure has now been addressed. If you can't do that, how are you going to regain their trust?

Apologise

This can sound a little trite, but being apologetic for having lost customer data can be a powerful tool in winning back public sentiment. And, per the previous paragraph, there's always something to apologise for.

Now take the CloudPets breach notice which was (eventually) sent out. They begin by saying:

We recently discovered that unauthorized third parties illegally gained access to our CloudPets server

They use words like "unauthorized" and "illegally" to shift blame, and their communication is littered with what effectively boils down to excuses. For example, they talked about the prevalence of MongoDB instances being compromised but neglected to mention that this only happened when the owner didn't put a password on them! This is clearly a major cock-up on their part, yet at no stage did they apologise for losing valuable customer data (including exposing voice recordings of people's children). The word "sorry" simply never appears. Compare that to how the Australian Red Cross Blood Service responded after losing a large amount of customer data (including mine and my wife's):

The title of their disclosure literally has the word "apologises" in it! In this case, it was actually a contractor of theirs that lost the data (and they were very sorry too), but the Red Cross "owned" the incident and took accountability for it.

Whilst writing this post, I had someone contact me with a data exposure story of their own. He uses an online accounting service and his account manager had inadvertently sent him personal information about someone else; it was a basic human error scenario. He let them know and went to bed angry, convinced he'd now need to move to another provider given their sloppy handling of personal info. And then he woke up to this:

First thing this morning, I had an apology email from my account manager, followed up by a phone call from her boss detailing the very impressive list of things they're doing as a consequence. They'd already contacted the Information Commissioner's Office, and their data protection officer was in the process of compiling a full report on it for submission to the ICO. All their processes will be reviewed. The other client had been contacted and his credentials changed, and they also asked me to delete any copies of the email with the details in. Finally the whole team will be given additional training on the subject of data protection.

This is such a simple thing - such an easy thing - and although this was a small-scale incident ultimately only involving two parties, carefully worded apologies can work well anywhere. In this case, simply by acknowledging that they'd screwed up and demonstrating that they'd taken quite reasonable steps to prevent it happening again, they retained a customer. How easy is that?!

Summary

Data breaches are never a pleasant thing to deal with either as an individual victim or as the company that lost the data. Add to that the various regulations in different jurisdictions and the potential legal ramifications for the company involved and the whole thing starts to get very messy. That's before the PR people even step in to put their spin on things.

But there are very reasonable expectations that we can align around as an industry. Largely, it boils down to letting all impacted parties know within a few days and giving them honest facts whilst doing your best to protect them from further risk. Frankly, that's just basic decency and it doesn't matter where in the world the company operates or what industry they're in or how important they think their data is, it's just the right thing to do and it's dead easy too!