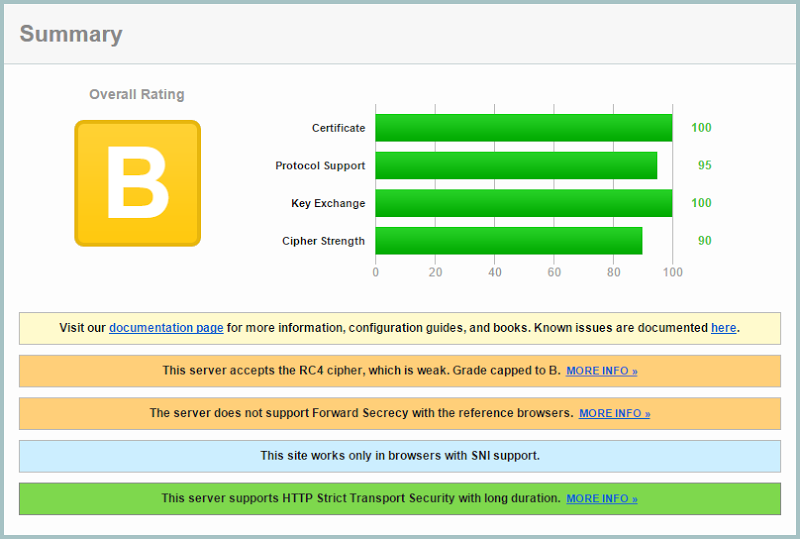

I’ve often received feedback from people about this SSL Labs test of Have I been pwned? (HIBP):

Just recently I had an email effectively saying “drop this cipher, do that other thing, you’re insecure kthanksbye”. Precisely what this individual thought an attacker was going to do with an entirely public site wasn’t quite clear (and I will come back to this later on), but regardless, if I’m going to have SSL then clearly I want good SSL and this report bugged me.

A couple of months ago I wrote about how It’s time for A grade SSL on Azure websites which talked about how Microsoft’s SSL termination in front of their web app service (this is their PaaS cloud offering for websites) was the reason for the rating. Customers can’t control this, it’s part of the service. But as I also said in that blog post, the aspects of their implementation which were keeping the rating above at a B (namely ongoing RC4 support), were shortly going to be remediated. It’s now happened so let’s take a look at what this means.

A grade SSL

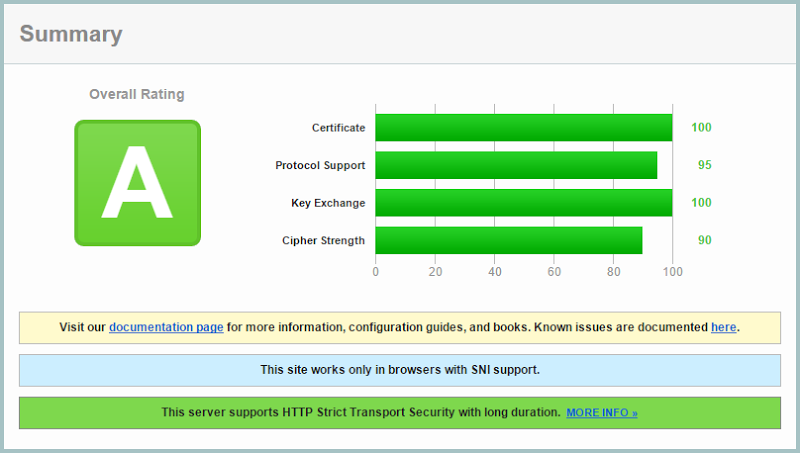

Before we jump into the new state of Azure SSL, it’s worth a quick look at the Ars Technica piece on the growing practicality of attacks against SSL implementations using RC4. Particularly take note of the video on The RC4 NOMORE Attack which demonstrates successful auth cookie decryption of SSL traffic due to the weaknesses in the cipher. Clearly, it’s time to move on and that’s just what Microsoft has done. Here’s my SSL Labs report for HIBP now:

Hoorah – “A” grade! Now let’s talk about why this may well have not been a simple thing for Microsoft.
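If you want a quick local sanity check alongside the SSL Labs report, a minimal Python sketch like the one below shows which protocol and cipher a server negotiates with your own client. The function names and the `LEGACY_MARKERS` list are my own assumptions for illustration. Keep in mind it only reports what this particular client ends up with; a modern Python build usually won't offer RC4 at all, so it doesn't prove the server has dropped it the way a full SSL Labs scan does.

```python
import socket
import ssl

# Substrings treated as "legacy" in this sketch; the list is an assumption.
LEGACY_MARKERS = ("RC4", "SSLv3")


def is_legacy(protocol: str, cipher: str) -> bool:
    """Return True if the protocol or cipher name mentions RC4 or SSL 3."""
    return any(marker in protocol or marker in cipher for marker in LEGACY_MARKERS)


def negotiated(host: str, port: int = 443, timeout: float = 5.0) -> tuple[str, str]:
    """Connect to host and return (protocol, cipher name) as negotiated by this client."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            # cipher() returns (name, protocol, secret bits); only the name is needed here.
            return tls.version(), tls.cipher()[0]
```

Calling `negotiated("haveibeenpwned.com")` and passing the result to `is_legacy` gives a rough yes or no on whether the connection you actually got is using something that should have been retired.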

Because dropping support has real world consequences

Something I’m continually seeing that frankly worries me a bit is people taking absolute positions on security in the absence of objective thinking. For example, “If it’s not perfect SSL it’s useless” or in the case of my recent CloudFlare post, “If you’re not encrypting to the origin then you’re better off having no SSL”. This “chucking the baby out with the bathwater” attitude can be really damaging if conclusions aren’t drawn from a holistic view of the situation that also considers relative risk, impact of exploit and of course, cost. I say this because for Microsoft, it was never a simple position of “oh we’ll just remove the RC4 ciphers”, there’s a real world impact in doing that and I want to illustrate it here.

Last year we had the whole POODLE debacle which was the final nail in the coffin for SSL 3. Clearly it had to go and different providers started killing it off at different rates. Microsoft gave a few weeks’ notice of it being removed so there was sufficient lead time for customers to test their things and take appropriate action if there were compatibility problems. But hey, nobody should still have a dependency on SSL 3 in 2014, right? I mean maybe clients would fall back to it if necessary (and indeed that was a behaviour exploited by POODLE), but we’ve had TLS since ’99 so a decade and a half on all clients will support that and won’t miss SSL at all, right?

I was involved in a project run by vendors managing vendors (that should always be the first clue you’re in for trouble), which saw hundreds of POS terminals in a major international city talking to web services on the Azure PaaS offering. This project was rolled out in mid-2014, only a couple of months before POODLE hit. When news of Microsoft disabling SSL 3 hit, I reached out to them: “I know it’s a silly question to ask in 2014, but all those POS terminals can talk TLS right? Keep in mind that Microsoft is about to disable SSL 3 which of course nobody should have been dependent on anyway.” The vendor said they’d have a meeting about it…

Of course the next I heard about it was when alarm bells started going off as suddenly customers in stores couldn’t process transactions. “Can Microsoft please turn SSL 3 back on”. Uh, no, it doesn’t work that way. You made a very bad decision about SSL support (actually, it was probably never even consciously thought about) and there is no way Microsoft is now going to turn around and put hundreds of thousands of other sites at risk because of your lack of foresight! It would take a firmware update after the vendor actually built the TLS compatibility to rectify the situation so for some time, everything remained broken.

I wanted to share this story to put in context decisions about dropping support for a technology when doing so breaks existing dependencies. Removing RC4 support will almost certainly break some things – probably obscure things – but break them nonetheless. Looking at this objectively, Microsoft has to manage that in a way that both minimises the impact on that small number of dependent customers and improves the security position for everyone else. Now I’m not saying that they shouldn’t have made this change earlier (in fact I think they should have), but I am saying that it’s not a simple decision and there’s a bunch of stuff they had to weigh up in order to make it.
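To make that weighing-up a bit more concrete, here’s a rough Python sketch of the kind of audit anyone running a service could do before retiring a protocol or cipher: count which clients are still negotiating the thing you’re about to remove. I have no idea how Microsoft actually measured this; the record shape `(client, protocol, cipher)`, the function name and the sample values are all hypothetical, so adapt it to whatever your own logs capture.

```python
from collections import Counter


def clients_at_risk(records, drop_protocols=("SSLv3",), drop_cipher_markers=("RC4",)):
    """Count connections per client that used a protocol or cipher slated for removal."""
    at_risk = Counter()
    for client, protocol, cipher in records:
        uses_old_protocol = protocol in drop_protocols
        uses_old_cipher = any(marker in cipher for marker in drop_cipher_markers)
        if uses_old_protocol or uses_old_cipher:
            at_risk[client] += 1
    return at_risk


# Hypothetical handshake records, invented purely for illustration.
sample = [
    ("pos-terminal-17", "SSLv3", "RC4-SHA"),
    ("browser-a", "TLSv1.2", "ECDHE-RSA-AES128-GCM-SHA256"),
    ("pos-terminal-17", "SSLv3", "RC4-SHA"),
    ("legacy-client", "TLSv1", "RC4-SHA"),
]

for client, count in clients_at_risk(sample).most_common():
    print(client, count)
```

Anything that shows up in that count is a client that breaks the day the switch is flipped, which is exactly the conversation you want to have before the alarm bells go off rather than after.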

Let me add one more thing to my earlier story about the POS terminals as frankly, I’m still recovering from the pain and talking about it helps; what would you do if the Azure PaaS service the terminals were dependent on no longer supported SSL 3 but you still needed it for the clients to run? Easy, you just stand up an IaaS instance and configure IIS to support SSL 3 then update DNS to point over there instead (it was a very small web service easily migrated). Sounds like a good idea, right? So that was done very promptly, after which I urged people to check the IIS logs and ensure traffic was appearing… except it wasn’t. DNS was good, nameservers had the right records, the service was running, what would cause this? In an act of what I can only describe as gross incompetence, the POS terminals had all been configured not to point to the domain name for the service, but rather to the Azure temporary domain on azurewebsites.net. And there’s no remote update on the terminals, every single one would take a store visit. So the vendor asked if Microsoft could kindly update azurewebsites.net to point to their IIS instance instead… My therapy is ongoing.

Let us also exercise some common sense here…

In the intro of this post I talked about the absolutism that some people seem to suffer from in assessing the security position of applications. HIBP is a perfect example of where RC4 support really didn’t pose a risk of any consequence; exactly what is an attacker going to do if they manage to observe or manipulate traffic on the wire? See your email address when you search for it, the thing you send around in the clear via SMTP every day anyway? And also put this in the context of the effort required to compromise the RC4 cipher’d traffic – all 52 hours of it.

Take a step back from an issue like this and apply some common sense; an attacker who has inserted themselves in the middle of the comms needs to invest a not-insignificant amount of effort to extract anything useful and for what ROI? What’s the upside for them? That’s a genuinely valid question to ask when assessing security controls and when you factor in ease, likelihood and impact then assess that against the cost of, say, standing up your own IaaS instance and managing the whole thing yourself just to get rid of RC4, there are classes of app where that simply doesn’t make sense.

Now by no means do I want to imply that strong SSL doesn’t matter; your default position should always be to max out the security controls at your disposal. What I’m saying is that when cost is also a factor (and effort has a cost too), the security controls need to be commensurate with the value they provide.

I don’t want to harp on that any further, I’d just like to see more balance and objectivity in these discussions. Fortunately, like SSL 3, the RC4 discussion is now behind us when it comes to Azure web apps and we can proceed in a much more secure by default position… until the next thing goes wrong with SSL!