Last month I wrote about our password hashing having no clothes which, to cut to the chase, demonstrated how salted SHA hashes (such as those created by the ASP.NET membership provider) offered next to no protection from brute force attacks. I’m going to assume you’re familiar with the background story on this (read that article before this one if not), but the bottom line was that cryptographic hashing of passwords needs to be way slower. Not half the speed or even one tenth of the speed; it needs to be thousands of times slower.

The conclusion of the post was, frankly, a little unsatisfying. Why? Because it essentially said “If you take my favourite technology stack and use the default implementation to store passwords, it’s insecure”. Yes, I suggested alternative approaches, but these either didn’t work natively with the membership provider or required machine.config access, so they really weren’t conducive to today’s world of getting an app up into the cloud in 5 minutes.

But it turns out that we’re on the cusp of solving this for ASP.NET, and you can access a better solution right now. In fact, you may even be using it already without knowing it because, until now, it really hasn’t been publicised.

Project templates are dead – long live project templates!

A lot of this has to do with project templates and the best way to illustrate what’s changing is to take a look at a web project in Visual Studio 2010 and compare it to one in 2012.

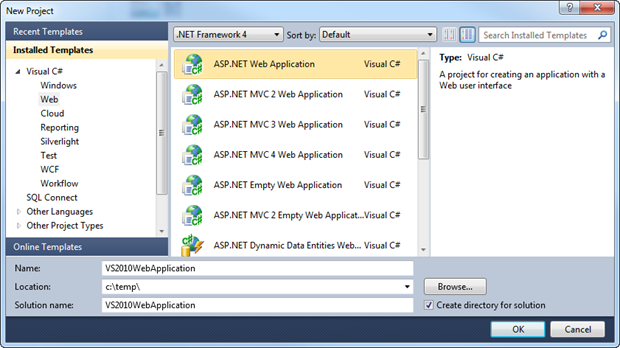

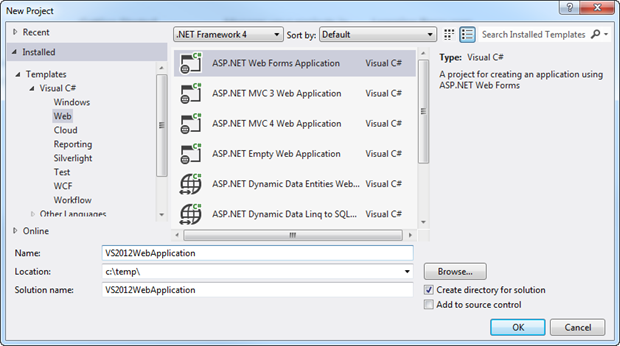

Let’s start with a .NET 4 ASP.NET Web Application in the outgoing version:

Argh – the colours! Moving right along, now we’ll do exactly the same thing in Visual Studio 2012. Note that this is still targeting .NET 4, so in theory we’re getting the same thing:

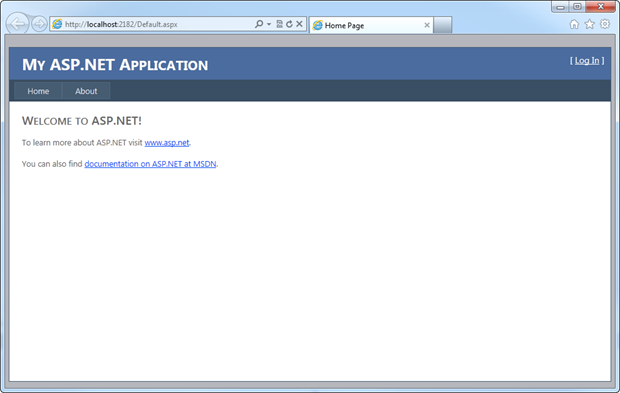

Except they’re not the same. Here’s what we got in 2010 when the app was run:

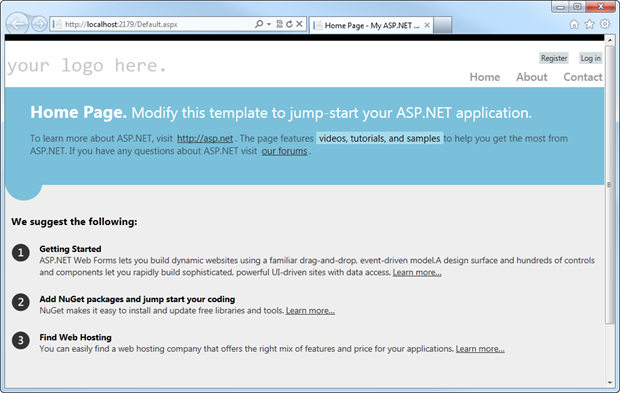

And here’s what we get in 2012:

This will not be news to many of you; much has already been discussed about the improvements to the new templates. They’re more mobile friendly, you get Modernizr and jQuery UI built in plus all the add-ons are now NuGet packages so they’re easy to update. There are many, many good reasons to use the new templates, but let me show you one that hasn’t been talked about very much.

Let’s take a look at the membership provider configuration in each of these projects, firstly in 2010:

```xml
<add name="AspNetSqlMembershipProvider"
     type="System.Web.Security.SqlMembershipProvider"
```

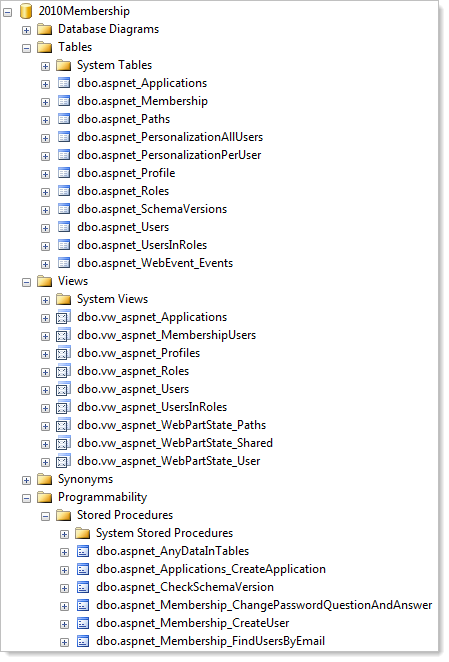

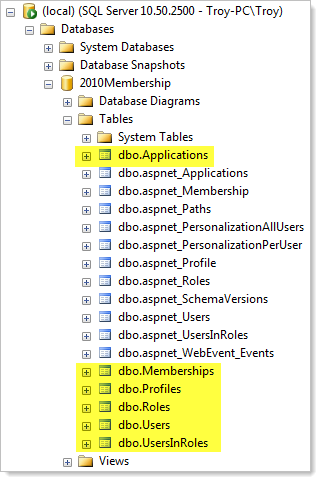

The SQL Membership Provider is the guy we used to apply on top of SQL Server (obviously) after running aspnet_regsql to create all the appropriate DB objects. I did a 5 minute wonder screencast on this last year and for the most part, this approach worked ok. It meant our DB layer ended up looking like this:

For the sake of brevity I’ll spare you from all the stored procedures (there are 55 of them), schemas (13) and roles (another 13). How can I put this nicely… it made the database a bloody mess. It’s not just the volume of objects, it’s the “aspnet” prefixes and dependencies on the bygone era of stored procedures (yes, yes, I know some people will argue with me vehemently about this) that made the DB feel like a bit of a dumping ground.

Let’s not dwell on that though, here’s what you’ll find in 2012:

```xml
<add name="DefaultMembershipProvider"
     type="System.Web.Providers.DefaultMembershipProvider, System.Web.Providers, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
```

Scott Hanselman wrote about System.Web.Providers last year in relation to a NuGet package which I’ll come back to a bit later. For now though, let’s take a look at the magic behind this. Firstly, there’s no more aspnet_regsql: you just make sure your connection string is set and the account has DBO rights (don’t worry, it doesn’t have to stay this way), then run the app up and attempt any action that causes the membership provider to hit the DB (e.g. log on; it doesn’t matter that there isn’t an account). Now let’s take a look at the objects that have been created:

That’s it. No views, no stored procedures, no roles, no schemas and not even any “aspnet” prefixes. This is the way normal people build databases! Ok, we can debate the pluralisation but other than that, it’s a reasonable DB structure.

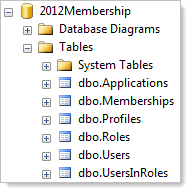

Let’s consider the changes from the old SQL membership provider, namely in the table which stores the membership data (old on the left, new on the right):

They’re very similar and frankly the omissions aren’t going to be missed by anyone (a dedicated column for lowercase email – really?!). But it’s what’s stored in the password field of this table which is the pièce de résistance…

Say hello to PBKDF2

Let me run each app up and create a single registration with the password “password” (please people, this is an example only!):

Ok, what’s going on here? Why has the hash in the 2012 template blown out? A given hashing algorithm always produces output of the same length regardless of the input string, so something different must be going on. And there is: what you’re seeing here is the result of the universal membership provider, which is now calling directly into the Crypto.HashPassword method that we’ve had in System.Web.Helpers for a little while now.
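To make the fixed-length point concrete, here’s a minimal Python sketch (not ASP.NET code) showing that a plain SHA-1 digest is always 20 bytes, which is 28 characters once base64-encoded, no matter how long the input is. Base64 encoding of the stored value is an assumption made for illustration.

```python
import base64
import hashlib

def encoded_sha1_length(text: str) -> int:
    """Length of the base64-encoded SHA-1 digest of `text`."""
    digest = hashlib.sha1(text.encode("utf-8")).digest()  # always 20 bytes
    return len(base64.b64encode(digest))

# One character or a thousand, the encoded digest is the same size.
for sample in ["a", "password", "x" * 1000]:
    print(len(sample), encoded_sha1_length(sample))
```

So a stored value noticeably longer than that has to be carrying something other than a bare digest.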

One of the great things about significant portions of ASP.NET now being open sourced is that it’s easier than ever to take a look under the covers. For those who want to see what’s going on, the entire crypto implementation is over on CodePlex. Most importantly for this post, the comments make it very easy to understand what’s happening:

```csharp
/* =======================
 * HASHED PASSWORD FORMATS
 * =======================
 *
 * Version 0:
 * PBKDF2 with HMAC-SHA1, 128-bit salt, 256-bit subkey, 1000 iterations.
 * (See also: SDL crypto guidelines v5.1, Part III)
 * Format: { 0x00, salt, subkey }
 */
```

And there it is: the membership provider is implementing PBKDF2 with 1,000 iterations of HMAC-SHA1, so from a brute force perspective, this imposes a significant workload increase over the default implementation in the old SQL membership provider.
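To see what the “Version 0” comment describes, here’s a minimal Python sketch that follows those parameters: a 128-bit random salt, PBKDF2 with HMAC-SHA1 at 1,000 iterations, a 256-bit subkey, and a stored blob of `{ 0x00, salt, subkey }`. It is not the System.Web.Helpers code; the function names are hypothetical, and UTF-8 password encoding and base64 storage are assumptions.

```python
import base64
import hashlib
import hmac
import os

# Parameters from the "Version 0" comment above.
SALT_SIZE = 16       # 128-bit salt
SUBKEY_SIZE = 32     # 256-bit subkey
ITERATIONS = 1000
FORMAT_MARKER = b"\x00"

def hash_password(password: str) -> str:
    """Return base64({ 0x00, salt, subkey }) using PBKDF2 with HMAC-SHA1."""
    salt = os.urandom(SALT_SIZE)
    subkey = hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt,
                                 ITERATIONS, dklen=SUBKEY_SIZE)
    return base64.b64encode(FORMAT_MARKER + salt + subkey).decode("ascii")

def verify_hashed_password(hashed: str, password: str) -> bool:
    """Re-derive the subkey using the stored salt and compare in constant time."""
    blob = base64.b64decode(hashed)
    if len(blob) != 1 + SALT_SIZE + SUBKEY_SIZE or blob[:1] != FORMAT_MARKER:
        return False
    salt = blob[1:1 + SALT_SIZE]
    expected = blob[1 + SALT_SIZE:]
    actual = hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt,
                                 ITERATIONS, dklen=SUBKEY_SIZE)
    return hmac.compare_digest(expected, actual)

stored = hash_password("password")
print(len(stored), verify_hashed_password(stored, "password"))
```

The blob is 1 + 16 + 32 = 49 bytes, which base64-encodes to 68 characters compared with 28 for a bare SHA-1 digest. That would account for the longer value in the 2012 database. Because the salt is random, hashing the same password twice gives different results, which is why verification re-derives the subkey from the stored salt rather than comparing two fresh hashes.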

In that previous hashing weakness post I wrote, it took 44 mins and 56 seconds to crack 63% of common passwords in a sample size of nearly 40,000. Had that sample used the new universal membership provider, that time would have blown out to more than a month: 31.2 days, to be exact. Where I previously used the paradigm of sitting through a couple of episodes of Family Guy, this is like watching The Phantom Menace back-to-back 330 times. Clearly this makes for a painful experience that will deter many a determined hacker.
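The arithmetic behind those numbers is easy to check. This Python sketch assumes, as the paragraph above does, that cracking time scales linearly with the 1,000 iterations. It also assumes a theatrical runtime of 136 minutes for The Phantom Menace.

```python
# From the earlier post: 44 min 56 s to crack 63% of the sample.
old_seconds = 44 * 60 + 56                  # 2,696 seconds
work_factor = 1000                          # assumption: cost scales with PBKDF2 iterations

new_seconds = old_seconds * work_factor
new_days = new_seconds / 86_400             # seconds per day

PHANTOM_MENACE_MINUTES = 136                # assumed runtime
viewings = (new_seconds / 60) / PHANTOM_MENACE_MINUTES

print(f"{new_days:.1f} days, or about {int(viewings)} viewings")
```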

Now of course this was never about making passwords uncrackable; as with most aspects of software security, it’s about raising the degree of difficulty to a level that makes cracking infeasible for a greater number of people. Will the raised bar make it unlikely that hacktivists will crack passwords from a run-of-the-mill website? Yes, quite likely. But will it stop the US government from cracking passwords on a website facilitating terrorist activity? Don’t bet on that.

The impact on performance

So what does this do to performance? How long does a logon now take? And will the prophecy of unacceptably high CPU utilisation be true? Let’s take a look.

What I’m going to do is log on to the system 1,000 times and see how long it takes with the old provider and then with the new. Here’s what’s happening:

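```csharp
// Requires: using System.Diagnostics; (Stopwatch) and using System.Web.Security; (Membership)
// Time 1,000 sequential logons against whichever membership provider web.config points to
var sw = new Stopwatch();
sw.Start();
for (var i = 0; i < 1000; i++)
{
    Membership.ValidateUser("troyhunt", "password");
}
sw.Stop();

// passwordDuration is a label on the page; "n0" formats with thousands separators and no decimals
passwordDuration.Text = sw.ElapsedMilliseconds.ToString("n0");
```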

Now keep in mind that you can’t really trust the Stopwatch class, at least not with any great degree of accuracy, but it’s good enough for indicative durations. I ran the above script with both membership providers a handful of times in IIS Express and averaged the results (incidentally, they were very similar when running directly under IIS). Here’s what I got:

There are a couple of interesting things to note here:

- The new provider is slower, but not 1,000 times slower. This is because the hash process itself is only one small part of logging on. On top of this there is also the handling of ADO.NET connections, execution of queries (more on that shortly) and other processing overhead beyond just generating a hash.

- From a user perspective, this is in no way an unacceptably long duration for a logon. As per the previous point, a bunch of stuff happens when you log on, but the bottom line is that the entire process takes about 100ms, which, for a once-per-session activity, is just fine.

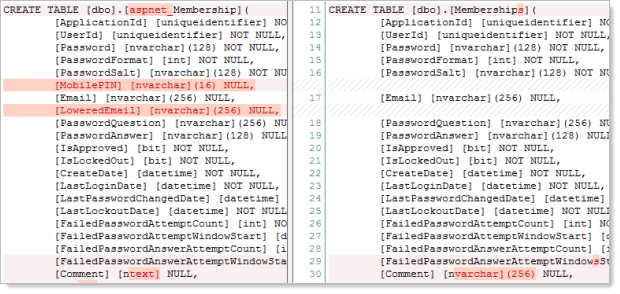

But what about the impact on the CPU? By design, these hashing algorithms are meant to make it work pretty hard, so is it going to cause nasty spikes in utilisation, effectively DoS’ing the app? Here’s what I observed in the 1,000 sequential runs:

This is using about 7.5% of the CPU for consistent, back-to-back hashing. Of course, this is all happening in a single thread, and the story will change pretty quickly with multiple simultaneous executions. Be that as it may, we still logged 1,000 people in over about a minute and a half, so I’ll stick to my assertion from the previous post that popularity at that level is a problem I’d love to have! Do be conscious of the additional overhead, but it’s unlikely to cause issues unless you’re dealing with serious scale.

Speaking of query execution, remember how the old provider created all those stored procedures (amongst other DB objects)? Here’s what used to happen when you logged on:


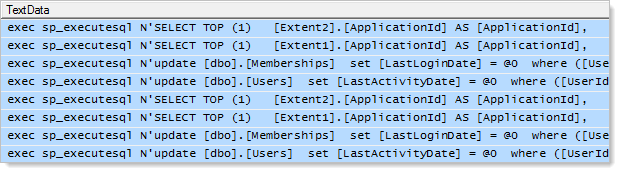

One stored procedure. That’s it. Moving forward into the universal provider, here’s what’s going on at the SQL end for a single logon:

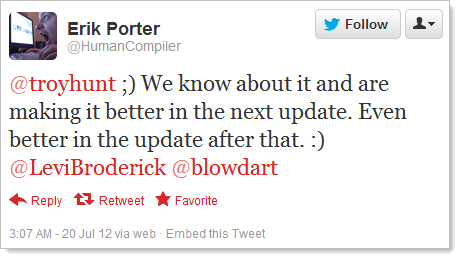

DBAs – put down your pitchforks! This is from the RC of Visual Studio 2012 using version 1.0 of the universal providers and fortunately the excessive queries are something that Microsoft knows about:

Ok, it’s excessive, but at least the guys are on the case and clearly they’re rectifying things. Hopefully they’ll be on top of this before VS2012 goes live and people start creating more dependencies on it. Fortunately, because the new templates pull the library in via NuGet, applying an update when one is available will be a very simple process indeed.

For interest’s sake, the current implementation is performing two identical sets of four queries, which look like this:

- Select the user record and pretty much every column on it using the username provided at login

- Select a trimmed down user record by the user ID from the previous query (not sure why a second query is needed…)

- Update the LastLoginDate on the Memberships table

- Update the LastActivityDate on the Users table

The second query seems a bit redundant and then repeating all four seems very redundant so hopefully the improvements that Erik alluded to in his tweet bring this down to a much more DB friendly three queries.

BTW – this is also a good example of where profiling DB queries is really important, particularly when there are abstractions between the code and the query such as the providers or an ORM. Fire up SQL Profiler and make sure you know what’s going on.

Better hashing for young and old

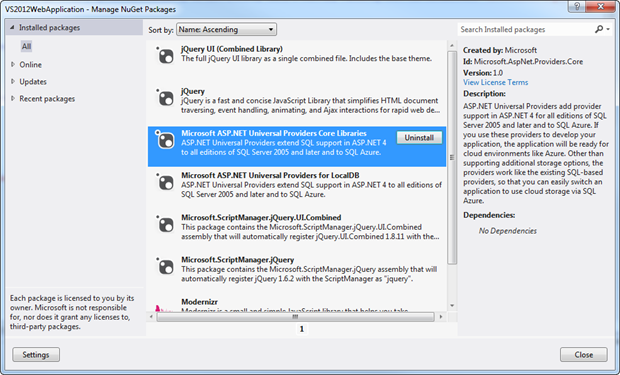

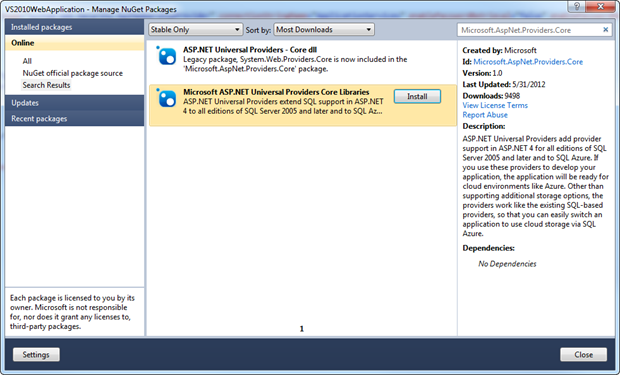

One of the great things about the templates that come packed into Visual Studio 2012 is an increasing dependency on NuGet. This is a very conscious move by Microsoft – less dependency on core framework components that only get updated every few years and more dependency on packages which can be updated and pushed independently.

When we take a look inside the packages already installed in the VS2012 template, here’s what’s inside:

The good news is that we can now just jump back on over to VS2010 and pull those same packages in:

Or for the console ninjas:

```
PM> Install-Package Microsoft.AspNet.Providers.Core
```

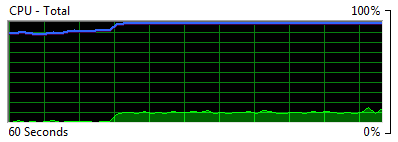

Now all we do is jump into the web.config and change the membership provider type from the old SqlMembershipProvider to the new DefaultMembershipProvider (just the values shown earlier plus a similar transformation for the role provider if you’re using it), hit the trusty F5 button then try to log on. Now let’s look at the database:

All the highlighted tables are the new ones, exactly as we saw earlier on when starting out with the 2012 template from scratch. So that’s it – today’s hashing (tomorrow’s if you consider we’re still in RC) is easily achievable in VS2010.

Hashes ain’t hashes

Updating a 2010 project template is easy, and potentially catastrophic. The problem is simply that if you have an existing project with existing hashes, following the advice above will fundamentally break your authentication. Even if you migrate all the accounts from the old tables to the new (which is easy enough), the stored hashes from the old days will not match the new hashes from the PBKDF2 model. Nobody will be able to log on.

Now of course there are ways you can code your way out of this; you just need to know which hashing algorithm was used for each user. If you know this, you can authenticate them with the old algorithm and then, once they’re verified, hash the password with the new algorithm. But the membership provider doesn’t give you this facility; you’ll need to start pulling it apart and implementing your own logic.
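That “verify the old way, then re-hash the new way” logic can be sketched in a few lines. This is a Python illustration of the idea, not something the membership provider offers. The user record layout and every function name are hypothetical. The legacy format is assumed to be base64(SHA1(salt || UTF-16LE(password))); check that against your own aspnet_Membership data before relying on it.

```python
import base64
import hashlib
import hmac
import os

ITERATIONS = 1000   # "Version 0" parameters
SALT_SIZE = 16
SUBKEY_SIZE = 32

def legacy_sha1_hash(password: str, salt_b64: str) -> str:
    # ASSUMPTION: legacy hashes are base64(SHA1(salt || UTF-16LE(password))).
    salt = base64.b64decode(salt_b64)
    digest = hashlib.sha1(salt + password.encode("utf-16-le")).digest()
    return base64.b64encode(digest).decode("ascii")

def pbkdf2_hash(password: str) -> str:
    # base64({ 0x00, salt, subkey }) using PBKDF2 with HMAC-SHA1
    salt = os.urandom(SALT_SIZE)
    subkey = hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt,
                                 ITERATIONS, dklen=SUBKEY_SIZE)
    return base64.b64encode(b"\x00" + salt + subkey).decode("ascii")

def pbkdf2_verify(hashed: str, password: str) -> bool:
    blob = base64.b64decode(hashed)
    salt, expected = blob[1:1 + SALT_SIZE], blob[1 + SALT_SIZE:]
    actual = hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt,
                                 ITERATIONS, dklen=SUBKEY_SIZE)
    return blob[:1] == b"\x00" and hmac.compare_digest(expected, actual)

def log_on(user: dict, password: str) -> bool:
    """Verify a password, upgrading a legacy hash to PBKDF2 on success.

    `user` is a HYPOTHETICAL record:
    {"format": "legacy" | "pbkdf2", "hash": str, "salt": str | None}
    """
    if user["format"] == "legacy":
        expected = legacy_sha1_hash(password, user["salt"])
        if not hmac.compare_digest(expected.encode("ascii"), user["hash"].encode("ascii")):
            return False
        # The old algorithm vouched for this password, so re-hash it with PBKDF2.
        # In a real app this would be persisted with an UPDATE against the DB.
        user.update(format="pbkdf2", hash=pbkdf2_hash(password), salt=None)
        return True
    return pbkdf2_verify(user["hash"], password)
```

Each account moves to the stronger hash the first time its owner logs on successfully. Accounts that are never used stay on the weak legacy hash, so you may still want to force resets for those eventually.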

The other option is that you could simply roll over to the new model then ask existing users to reset their passwords. You do have a secure password reset feature, don’t you? Anyway, this is not going to be feasible in many cases but if there’s a small group of users who you’re able to coerce into performing a slightly inconvenient process, that’s an easy way out.

If you do want to roll over an existing system, here’s what you need for the membership and role providers (this doesn’t cover the profile provider):

```sql
INSERT INTO dbo.Applications
SELECT ApplicationName, ApplicationId, Description
FROM dbo.aspnet_Applications
GO

INSERT INTO dbo.Roles
SELECT ApplicationId, RoleId, RoleName, Description
FROM dbo.aspnet_Roles
GO

INSERT INTO dbo.Users
SELECT ApplicationId, UserId, UserName, IsAnonymous, LastActivityDate
FROM dbo.aspnet_Users
GO

INSERT INTO dbo.Memberships
SELECT ApplicationId, UserId, Password, PasswordFormat, PasswordSalt,
       Email, PasswordQuestion, PasswordAnswer, IsApproved, IsLockedOut,
       CreateDate, LastLoginDate, LastPasswordChangedDate, LastLockoutDate,
       FailedPasswordAttemptCount, FailedPasswordAttemptWindowStart,
       FailedPasswordAnswerAttemptCount, FailedPasswordAnswerAttemptWindowStart,
       Comment
FROM dbo.aspnet_Membership
GO

INSERT INTO dbo.UsersInRoles
SELECT * FROM dbo.aspnet_UsersInRoles
GO
```

But once again, remember, nobody will be able to log on until they perform a password reset.

A non-native alternative

In the previous post I gave a quick mention to Zetetic’s library, which integrates with the membership provider and supports bcrypt and PBKDF2. I liked the ease of pulling it from NuGet but lamented the fact that it required GAC installation and machine.config modification, which ruled it out of running on modern PaaS-style hosting such as AppHarbor (which I’m using) or Azure.

Fortunately the folks over at Zetetic have managed to bundle all that machine-level dependency up into a pretty quick fix; in fact you can now use their library to implement Secure Password Hashing for ASP.NET in One Line. That one line simply adds the Zetetic implementation to the app’s algorithm mappings in the Application_Start event of the Global.asax.cs:

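```csharp
// In Application_Start (Global.asax.cs); CryptoConfig lives in System.Security.Cryptography.
// Registers Zetetic's PBKDF2 implementation under the algorithm name "pbkdf2_local".
CryptoConfig.AddAlgorithm(typeof(Zetetic.Security.Pbkdf2Hash), "pbkdf2_local");
```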

One way that Zetetic’s implementation differs from that in the web helper from Microsoft is that rather than 1,000 iterations of the hash, we’re looking at 5,000. From a brute force perspective we’re literally looking at five times the workload. Whilst there’s possibly a usability and infrastructure overhead implication to this, it certainly improves the security.

I did specifically test the duration of the HashPassword method in the web helpers and consistently got results of around 17ms. I also tested the timing of a straight SHA1 hash and saw results ranging from 1/1000th to 1/3000th of HashPassword’s duration, which is within the range you’d expect (remember that Stopwatch trustworthiness thing, particularly for very small timings). For password hashing, I’d happily take a five-fold increase on HashPassword’s duration; somewhere closer to 100ms would be preferable for most web apps. Even though GPUs will churn through hashes much faster than that, it puts cracking them that much further out of reach without imposing what I’d consider an unacceptably high latency on logging on.
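If you want to reproduce that kind of comparison outside ASP.NET, this rough Python sketch times a single salted SHA-1 against PBKDF2-HMAC-SHA1 with 1,000 iterations and a 32-byte output. It measures Python’s `hashlib`, not HashPassword, so treat the numbers as indicative only. Each PBKDF2 iteration is an HMAC, which involves two SHA-1 computations, and a 32-byte subkey from a 20-byte hash needs two output blocks, so a ratio well above 1,000 is expected.

```python
import hashlib
import os
import time

def average_ms(fn, runs: int = 200) -> float:
    """Average wall-clock time of fn() in milliseconds over `runs` calls."""
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) * 1000 / runs

password = b"password"
salt = os.urandom(16)

sha1_ms = average_ms(lambda: hashlib.sha1(salt + password).digest())
pbkdf2_ms = average_ms(
    lambda: hashlib.pbkdf2_hmac("sha1", password, salt, 1000, dklen=32))

print(f"SHA-1: {sha1_ms:.4f} ms, PBKDF2 x1,000: {pbkdf2_ms:.4f} ms, "
      f"ratio ~{pbkdf2_ms / sha1_ms:,.0f}x")
```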

Conclusions

For both the universal membership provider and Zetetic’s library, surely the ideal would be the ability to customise the hash workload? As I discussed in the previous post, everyone needs to find their own sweet spot between positively impacting security and adversely impacting performance. Zetetic mentions possibly encapsulating this within another library, and we’re yet to hear anything from Microsoft on their implementation (yes, I did ask around).
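Neither library exposes this as described here, but the idea is simple to sketch. This hypothetical Python helper doubles the PBKDF2-HMAC-SHA1 iteration count until a single derivation on the current machine takes at least a target duration, such as the 100ms mentioned above. Any scheme like this also needs the iteration count stored alongside each hash, unlike the fixed 1,000 in the “Version 0” format. Otherwise, existing hashes could not be verified after the count is raised.

```python
import hashlib
import os
import time

def calibrate_iterations(target_ms: float = 100.0, start: int = 1000) -> int:
    """Return the first count in start, 2*start, 4*start, ... whose
    PBKDF2-HMAC-SHA1 derivation takes at least target_ms on this machine."""
    salt = os.urandom(16)
    iterations = start
    while True:
        began = time.perf_counter()
        hashlib.pbkdf2_hmac("sha1", b"calibration", salt, iterations, dklen=32)
        elapsed_ms = (time.perf_counter() - began) * 1000
        if elapsed_ms >= target_ms:
            return iterations
        iterations *= 2

print(calibrate_iterations(target_ms=100.0))
```

Doubling keeps the search short and errs on the side of more work. A real implementation would probably average several runs, because a single timing is noisy.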

But regardless of fine tuning the workload, the fact remains that from a security perspective the new universal membership provider gives us a one thousand-fold improvement over the old. In anyone’s book, this is a very good thing indeed.

On the downside, in reality you’re only going to use this in new apps, and anything you’ve already built with the original provider remains, quite frankly, little better than if no cryptographic storage existed at all. Then there are those eight SQL queries; not good, but it’s a known issue that’s being fixed, and the fix can be pulled down from NuGet with very little effort (don’t forget to watch for it if you’re already using the new providers).

Personally, I’m giving it a thumbs up, so much so that I’m immediately pulling the old model out of ASafaWeb, migrating the accounts, then asking folks to perform a password reset. I have the luxury of a small audience of private beta testers who use the existing account features, and I can afford to put them through a little bit of inconvenience. Doing this now, before gathering larger numbers of user accounts in the app (something I’ll write about very soon), is a smart investment IMHO.

So in conclusion, it’s a good thing. Go and get the universal provider, and if it’s too hard to move from the existing membership model, pray to whoever will listen that your hashes don’t get disclosed, because there’s very little protecting them if they do!