If you’re anything like me (and if you’re reading this, you probably are), your browser looks a little like this right now:

A bunch of different sites, all presently authenticated and sitting idly by, waiting for your next HTTP instruction to update your status, accept your credit card or email your friends. And then there are all those sites which, by virtue of the ubiquitous “remember me” checkbox, don’t appear open in any browser sessions yet remain willing and able to receive instruction on your behalf.

Now, remember also that HTTP is a stateless protocol and that requests to these sites could originate in no particular sequence, from any location, and, assuming they’re correctly formed, be processed without the application being any the wiser. What could possibly go wrong?!

Defining Cross-Site Request Forgery

CSRF is the practice of causing the user’s browser to issue an HTTP request to one of these sites without the user’s knowledge, usually with malicious intent. This attack pattern is an example of the confused deputy problem: the browser, acting on the user’s behalf, is fooled into misusing the user’s authority. From the OWASP definition:

A CSRF attack forces a logged-on victim’s browser to send a forged HTTP request, including the victim’s session cookie and any other automatically included authentication information, to a vulnerable web application. This allows the attacker to force the victim’s browser to generate requests the vulnerable application thinks are legitimate requests from the victim.

The user needs to be logged on (this is not an attack against the authentication layer), and for the CSRF request to succeed, it needs to be properly formed with the appropriate URL and header data such as cookies.

Here’s how OWASP defines the attack and the potential ramifications:

| Threat Agents | Attack Vectors | Security Weakness | Technical Impacts | Business Impact |
| --- | --- | --- | --- | --- |
| | Exploitability: AVERAGE | Prevalence: WIDESPREAD; Detectability: EASY | Impact: MODERATE | |
| Consider anyone who can trick your users into submitting a request to your website. Any website or other HTML feed that your users access could do this. | Attacker creates forged HTTP requests and tricks a victim into submitting them via image tags, XSS, or numerous other techniques. If the user is authenticated, the attack succeeds. | CSRF takes advantage of web applications that allow attackers to predict all the details of a particular action. Since browsers send credentials like session cookies automatically, attackers can create malicious web pages which generate forged requests that are indistinguishable from legitimate ones. Detection of CSRF flaws is fairly easy via penetration testing or code analysis. | Attackers can cause victims to change any data the victim is allowed to change or perform any function the victim is authorized to use. | Consider the business value of the affected data or application functions. Imagine not being sure if users intended to take these actions. Consider the impact to your reputation. |

There’s a lot of talk about trickery going on here. It’s actually not so much about tricking the user into issuing a fraudulent request (their role can be very passive); rather, it’s about tricking the browser, and there are plenty of ways to do that. We’ve already looked at XSS as a means of maliciously manipulating the content the browser requests, but it’s far from the only option. I’m going to show just how simple it can be.

Anatomy of a CSRF attack

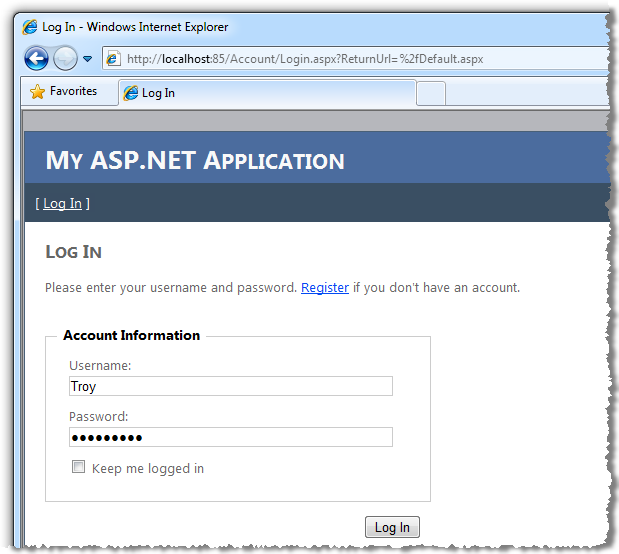

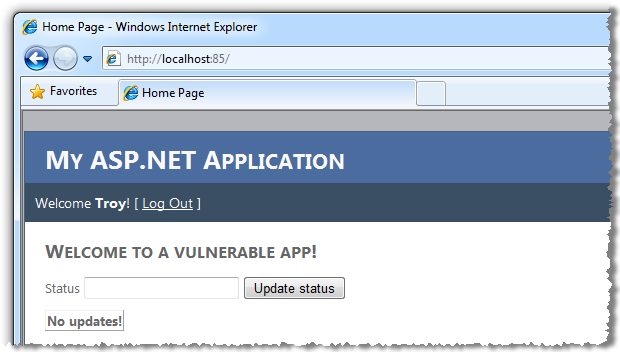

To make this attack work, we want to get logged into an application and then make a malicious request from an external source. Because it’s all the rage these days, the vulnerable app is going to allow the user to update their status. The app provides a form to do this which calls on an AJAX-enabled WCF service to submit the update.

To exploit this application, I’ll avoid the sort of skulduggery and trickery many successful CSRF exploits use and keep it really, really simple. So simple in fact that all the user needs to do is visit a single malicious page in a totally unrelated web application.

Let’s start with the vulnerable app. Here’s how it looks:

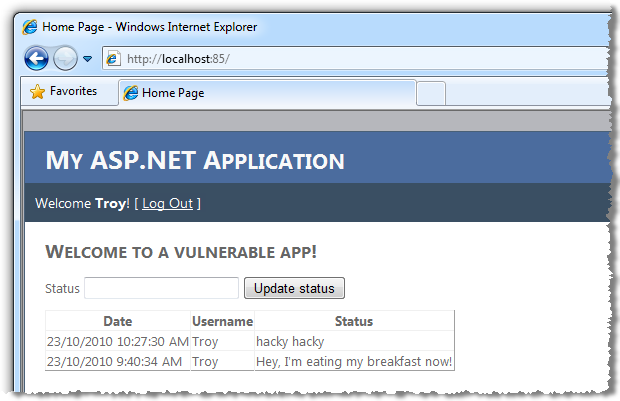

This is a pretty vanilla ASP.NET Web Application template with an application services database in which I’ve registered as “Troy”. Once I successfully authenticate, here’s what I see:

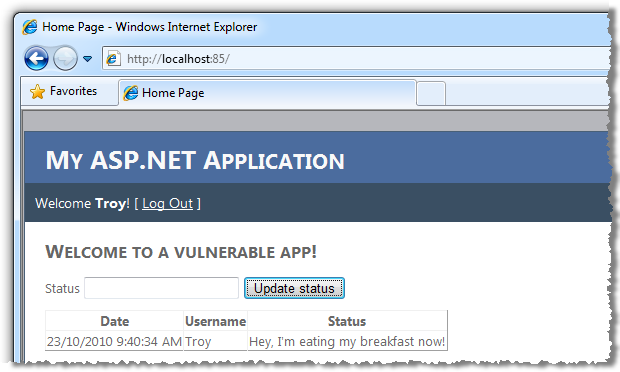

When I enter a new status value (something typically insightful for social media!), and submit it, there’s an AJAX request to a WCF service which receives the status via POST data after which an update panel containing the grid view is refreshed:

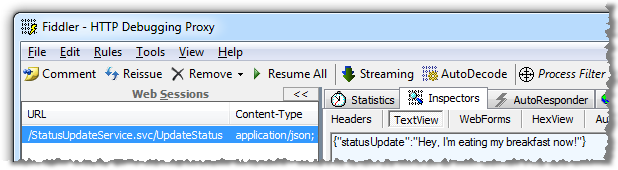

From the perspective of an external party, all the information above can be easily discovered because it’s disclosed by the application. Using Fiddler we can clearly see the JSON POST data containing the status update:

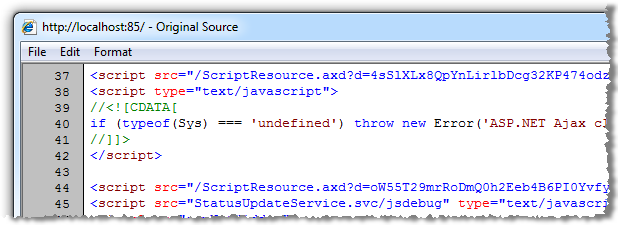

Then the page source discloses the action of the button:

<input type="button" value="Update status" onclick="return UpdateStatus()" />

And the behaviour of the script:

<script language="javascript" type="text/javascript">

// <![CDATA[

function UpdateStatus() {

var service = new Web.StatusUpdateService();

var statusUpdate = document.getElementById('txtStatusUpdate').value;

service.UpdateStatus(statusUpdate, onSuccess, null, null);

}

function onSuccess(result) {

var statusUpdate = document.getElementById('txtStatusUpdate').value = "";

__doPostBack('MainContent_updStatusUpdates', '');

}

// ]]>

</script>

And we can clearly see a series of additional JavaScript files required to tie it all together:

What we can’t see externally (but could easily test for), is that the user must be authenticated in order to post a status update. Here’s what’s happening behind the WCF service:

[OperationContract]

public void UpdateStatus(string statusUpdate)

{

if (!HttpContext.Current.User.Identity.IsAuthenticated)

{

throw new ApplicationException("Not logged on");

}

var dc = new VulnerableAppDataContext();

dc.Status.InsertOnSubmit(new Status

{

StatusID = Guid.NewGuid(),

StatusDate = DateTime.Now,

Username = HttpContext.Current.User.Identity.Name,

StatusUpdate = statusUpdate

});

dc.SubmitChanges();

}

This is a very plain implementation but it clearly illustrates that status updates only happen for users with a known identity after which the update is recorded directly against their username. On the surface of it, this looks pretty secure, but there’s one critical flaw…

Let’s create a brand new application which will consist of just a single HTML file hosted in a separate IIS website. Imagine this is a malicious site sitting anywhere out there on the web. It’s totally independent of the original site. We’ll call the page “Attacker.htm” and stand it up on a separate site on port 84.

What we want to do is issue a status update to the original site and the easiest way to do this is just to grab the relevant scripts from above and reconstruct the behaviour. In fact we can even trim it down a bit:

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN"

"http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title></title>

<script src="http://localhost:85/ScriptResource.axd?d=4sSlXLx8QpYnLirlbD...

<script src="http://localhost:85/ScriptResource.axd?d=oW55T29mrRoDmQ0h2E...

<script src="http://localhost:85/StatusUpdateService.svc/jsdebug" type="...

<script language="javascript" type="text/javascript">

// <![CDATA[

var service = new Web.StatusUpdateService();

var statusUpdate = "hacky hacky";

service.UpdateStatus(statusUpdate, null, null, null);

// ]]>

</script>

</head>

<body>

You've been CSRF'd!

</body>

</html>

Ultimately, this page consists of two external script resources and a reference to the WCF service, each of which is requested directly from the original site on port 85. All we need then is for the JavaScript to actually call the service. This has been trimmed down a little to drop the onSuccess method as we don’t need to do anything after it executes.

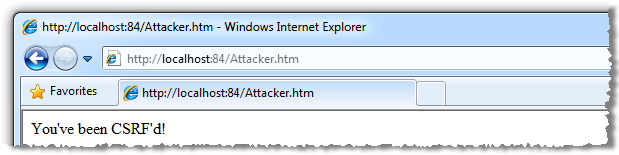

Now let’s load that page up in the browser:

Ok, that’s pretty much what was expected but has the vulnerable app actually been compromised? Let’s load it back up and see how our timeline looks:

What’s now obvious is that simply by loading a totally unrelated webpage our status updates have been compromised. I didn’t need to click any buttons, accept any warnings or download any malicious software; I simply browsed to a web page.

Bingo. Cross site request forgery complete.

What made this possible?

The exploit is actually extremely simple when you consider the mechanics behind it. All I’ve done is issue a malicious HTTP request to the vulnerable app which is almost identical to the earlier legitimate one, except of course for the request payload. Because I was already authenticated to the original site, the request included the authentication cookie, so as far as the server was concerned, it was entirely valid.

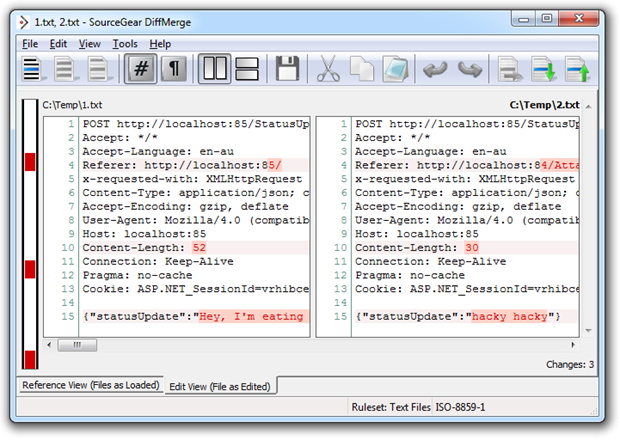

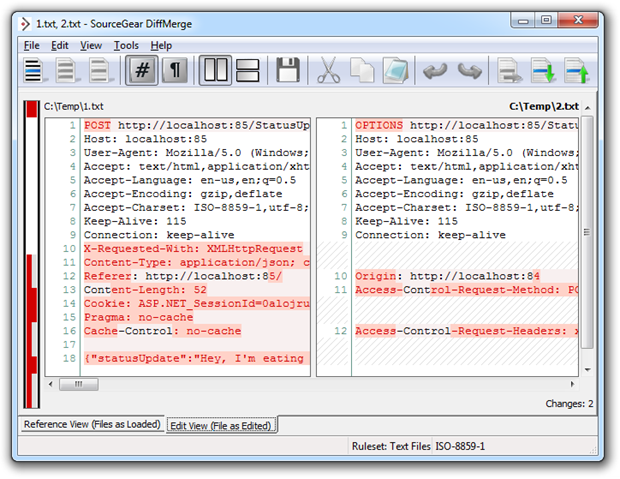

This becomes a little clearer when you compare the two requests. Take a look at a diff between the two raw requests (both captured with Fiddler), and check out how similar they are (legitimate on the left, malicious on the right). The differences are highlighted in red:

As you can see on line 13, the cookie with the session ID which persists the authentication between requests is alive and well. Obviously the status update on line 15 changes and as a result, so does the content length on line 10. From the app’s perspective this is just fine because it’s obviously going to receive different status updates over time. In fact the only piece of data giving the app any indication as to the malicious intent of the request is the referrer. More on that a bit later.

What this boils down to in the context of CSRF is that because the request was predictable, it was exploitable. That one piece of malicious code we wrote is valid for every session of every user and it’s equally effective across all of them.
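To make the predictability point concrete, here is a minimal JavaScript sketch of a handler that, like the WCF service above, decides purely on the session cookie. It is not the ASP.NET code from the sample app: the `sessions` map, the `handleUpdateStatus` function, the cookie value and the legitimate referer URL are all hypothetical stand-ins.

```javascript
// Hypothetical in-memory session store: cookie value -> username.
const sessions = new Map([["abc123", "Troy"]]);
const statuses = [];

// Mimics the vulnerable service: the only check is whether the cookie maps to a user.
function handleUpdateStatus(request) {
  const username = sessions.get(request.cookies.sessionId);
  if (!username) {
    return { status: 401, body: "Not logged on" };
  }
  statuses.push({ username, statusUpdate: request.body.statusUpdate });
  return { status: 200, body: "OK" };
}

// The browser attaches the same cookie regardless of which page triggered the request.
const legitimate = {
  referer: "http://localhost:85/", // assumed page on the vulnerable site
  cookies: { sessionId: "abc123" },
  body: { statusUpdate: "Eating breakfast" },
};
const forged = {
  referer: "http://localhost:84/Attacker.htm",
  cookies: { sessionId: "abc123" },
  body: { statusUpdate: "hacky hacky" },
};

console.log(handleUpdateStatus(legitimate).status); // 200
console.log(handleUpdateStatus(forged).status);     // 200
```

Nothing the handler inspects differs between the two requests, which is why the same forged page works against every logged-on user.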

Other CSRF attack vectors

The example above was really a two part attack. Firstly, the victim needed to load the attacker website. Achieving this could have been done with a little social engineering or smoke and mirrors. The second part of the attack involved the site making a POST request to the service with the malicious status message.

There are many, many other ways CSRF can manifest itself. Cross site scripting, for example, could be employed to get the CSRF request nicely embedded and persisted into a legitimate (albeit vulnerable) website. And because of the nature of CSRF, it could be any website, not just the target site of the attack.

Remember also that a CSRF vulnerability may be exploited by a GET or a POST request. Depending on the design of the vulnerable app, a successful exploit could be as simple as carefully constructing a URL and socialising that with the victim. For GET requests in particular, a persistent XSS attack with an image tag containing a source value set to a vulnerable path causing the browser to automatically make the CSRF request is highly feasible (avatars on forums are a perfect case for this).
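As a rough illustration of the GET variant, the sketch below builds the kind of image tag described above. The endpoint and the `statusUpdate` parameter come from the sample app; the `buildForgedImageTag` helper is hypothetical, and whether the service accepts GET at all depends on how it is configured.

```javascript
// Builds the markup an attacker might plant, for example as a forum avatar.
function buildForgedImageTag(endpoint, statusUpdate) {
  // encodeURIComponent escapes spaces, quotes and other characters unsafe in a query string
  const url = endpoint + "?statusUpdate=" + encodeURIComponent(statusUpdate);
  // Zero width and height keep the broken image from being noticed
  return '<img src="' + url + '" width="0" height="0" alt="" />';
}

const tag = buildForgedImageTag(
  "http://localhost:85/StatusUpdateService.svc/UpdateStatus",
  "hacky hacky"
);
console.log(tag);
```

Any browser rendering that markup requests the URL automatically, with the victim’s cookies attached.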

Employing the synchroniser token pattern

The great thing about architectural patterns is that someone has already come along and done the hard work to solve many common software challenges. The synchroniser token pattern attempts to inject some state management into HTTP requests by persisting a piece of unknown data across requests. The presence and value of that data can indicate particular application states and the legitimacy of requests.

For example, the synchroniser token pattern is frequently used to avoid double post-backs on a web form. In this model, a token (consider it as a unique string), is stored in the user’s session as well as in a hidden field in the form. Upon submission, the hidden field value is compared to the session and if a match is found, processing proceeds after which the value is removed from session state. The beauty of this pattern is that if the form is re-submitted by refresh or returning to the original form via the back button, the token will no longer be in session state and the appropriate error handling can occur rather than double-processing the submission.
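The double-submit use of the pattern can be sketched in a few lines of JavaScript. This is only an illustration under assumed names: `session` is a plain object standing in for server-side session state, and `issueFormToken` and `submitForm` are hypothetical helpers.

```javascript
const crypto = require("crypto");

// Hypothetical stand-in for per-user session state.
const session = {};

// Called when the form is rendered: store a token and embed it in a hidden field.
function issueFormToken() {
  session.formToken = crypto.randomUUID();
  return session.formToken;
}

// Called on submission: the token must match and is then consumed.
function submitForm(tokenFromHiddenField) {
  if (!session.formToken || session.formToken !== tokenFromHiddenField) {
    return "rejected: duplicate or stale submission";
  }
  delete session.formToken; // one use only
  return "processed";
}

const token = issueFormToken();
console.log(submitForm(token)); // processed
console.log(submitForm(token)); // rejected: duplicate or stale submission
```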

We’ll use a similar pattern to guard against CSRF but rather than using the synchroniser token to avoid the double-submit scenario, we’ll use it to remove the predictability which allowed the exploit to occur earlier on.

Let’s start with creating a method in the page which allows the token to be requested. It’s simply going to try to pull the token out of the user’s session state and if it doesn’t exist, create a brand new one. In this case, our token will be a GUID which has sufficient uniqueness for our purposes and is nice and easy to generate. Here’s how it looks:

protected string GetToken()

{

if (Session["Token"] == null)

{

Session["Token"] = Guid.NewGuid();

}

return Session["Token"].ToString();

}
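One caveat worth noting: GUIDs are specified to be unique rather than unpredictable, so where the token is the only thing standing between an attacker and a forged request, a value drawn from a cryptographically secure random number generator is the more conservative choice. The sketch below shows the same get-or-create logic in JavaScript under that assumption; `session` is a hypothetical plain object standing in for ASP.NET session state.

```javascript
const crypto = require("crypto");

// Returns the session's token, creating one on first use (mirrors GetToken above).
function getToken(session) {
  if (!session.token) {
    // 32 random bytes from the OS CSPRNG, hex-encoded to 64 characters
    session.token = crypto.randomBytes(32).toString("hex");
  }
  return session.token;
}

const session = {};
const first = getToken(session);
const second = getToken(session);
console.log(first === second); // true: the token is stable within a session
console.log(first.length);     // 64
```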

We’ll now make a very small adjustment in the JavaScript which invokes the service so that it retrieves the token from the method above and passes it to the service as a parameter:

function UpdateStatus() {

var service = new Web.StatusUpdateService();

var statusUpdate = document.getElementById('txtStatusUpdate').value;

var token = "<%= GetToken() %>";

service.UpdateStatus(statusUpdate, token, onSuccess, null, null);

}

Finally, let’s update the service to receive the token and ensure it’s consistent with the one stored in session state. If it’s not, we’re going to throw an exception and bail out of the process. Here’s the adjusted method signature and the first few lines of code:

[OperationContract]

public void UpdateStatus(string statusUpdate, string token)

{

var sessionToken = HttpContext.Current.Session["Token"];

if (sessionToken == null || sessionToken.ToString() != token)

{

throw new ApplicationException("Invalid token");

}

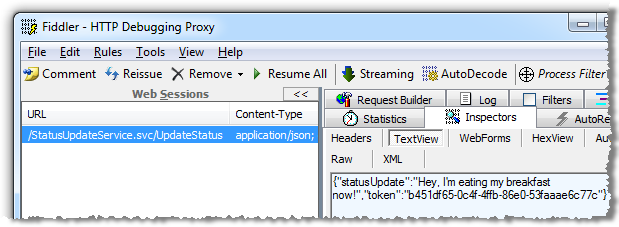

Now let’s run the original test again and see how that request looks:

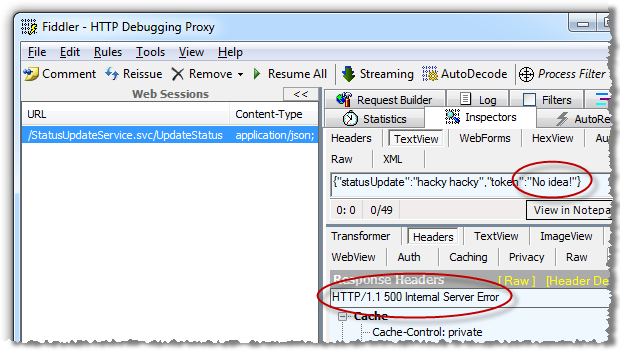

This seems pretty simple, and it is. Have a think about what’s happening here; the service is only allowed to execute if a piece of information known only to the current user’s session is persisted into the request. If the token isn’t known, here’s what ends up happening (I’ve passed “No idea!” from the attacker page in the place of the token):

Yes, the token can be discovered by anyone who is able to inspect the source code of the page loaded by this particular user and yes, they could then reconstruct the service request above with the correct token. But none of that is possible with the attack vector illustrated above as the CSRF exploit relies purely on an HTTP request being unknowingly issued by the user’s browser without access to this information.
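The server-side check can be sketched in JavaScript as follows. The names are hypothetical (`session` stands in for session state, and the token value is a placeholder), and the constant-time comparison via `crypto.timingSafeEqual` is an extra precaution that goes beyond the simple string comparison used in the service above.

```javascript
const crypto = require("crypto");

// Compares the submitted token with the one held in session state.
function isValidToken(session, submittedToken) {
  if (!session.token || typeof submittedToken !== "string") {
    return false;
  }
  const expected = Buffer.from(session.token, "utf8");
  const actual = Buffer.from(submittedToken, "utf8");
  // timingSafeEqual throws on a length mismatch, so compare lengths first
  return expected.length === actual.length &&
    crypto.timingSafeEqual(expected, actual);
}

function updateStatus(session, statusUpdate, token) {
  if (!isValidToken(session, token)) {
    throw new Error("Invalid token");
  }
  return { username: session.username, statusUpdate };
}

// Placeholder session; a real token would be generated per session.
const session = { username: "Troy", token: "3f2b9c0e-example-token" };

console.log(updateStatus(session, "Eating breakfast", session.token).statusUpdate);
try {
  updateStatus(session, "hacky hacky", "No idea!");
} catch (e) {
  console.log(e.message); // Invalid token
}
```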

Native browser defences and cross-origin resource sharing

All my examples above were done with Internet Explorer 8. I’ll be honest; this is not my favourite browser. However, one of the many reasons I don’t like it is the very reason I used it above and that’s simply that it doesn’t do a great job of implementing native browser defences to a whole range of attack scenarios.

Let me demonstrate – earlier on I showed a diff of a legitimate request issued by completing the text box on the real website next to a malicious request constructed by the attacker application. We saw these requests were near identical and that the authentication cookie was happily passed through in the headers of each.

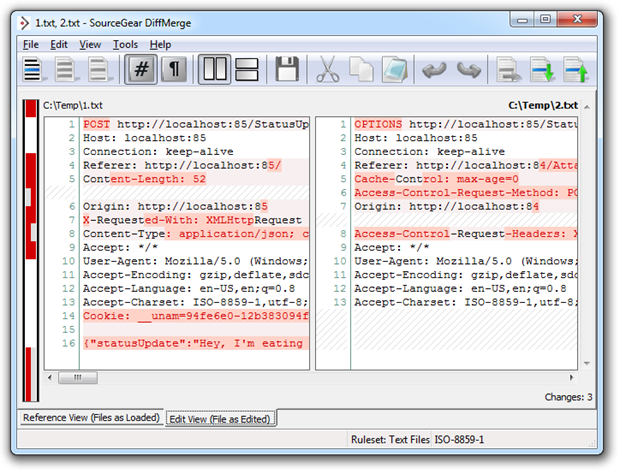

Let’s compare that to the requests created by exactly the same process in Chrome 7, again with the legitimate request on the left and the malicious request on the right:

These are now fundamentally different requests. Firstly, the HTTP POST has gone in favour of an HTTP OPTIONS request intended to return the HTTP methods supported by the server. We’ve also got an Access-Control-Request-Method entry as well as an Access-Control-Request-Headers and both the cookie and JSON body are missing. The other thing not shown here is the response. Rather than the usual HTTP 200 OK message, an HTTP 302 FOUND is returned with a redirect to “/Account/Login.aspx?ReturnUrl=%2fStatusUpdateService.svc%2fUpdateStatus”. This is happening because without a cookie, the application is assuming the user is not logged in and is kindly sending them over to the login page.

The story is similar (but not identical) with Firefox:

This all links back to the XMLHttpRequest API (XHR) which allows the browser to make a client-side request to an HTTP resource. This methodology is used extensively in AJAX to enable fragments of data to be retrieved from services without the need to post the entire page back and process the request on the server side. In the context of this example, it’s used by the AJAX-enabled WCF service and encapsulated within one of the script resources we added to the attacker page.

Now, the thing about XHR is that, surprise, surprise, different browsers handle it in different fashions. Prior to Chrome 2 and Firefox 3.5, these browsers simply wouldn’t allow XHR requests to be made outside the scope of the same-origin policy, meaning the attacker app would not be able to make the request with these browsers. However, since the newer generation of browsers arrived, cross-origin XHR is permissible, with the caveat that its execution is not denied by the app. The practice of these cross-site requests has become known as cross-origin resource sharing (CORS).
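For reference, two URLs share an origin only when scheme, host and port all match, which is why the attacker page on port 84 counts as cross-origin to the app on port 85. A small sketch using the standard `URL` class (the `Default.aspx` page name is an assumption for illustration):

```javascript
// Two URLs share an origin only if scheme, host and port all match.
function sameOrigin(a, b) {
  return new URL(a).origin === new URL(b).origin;
}

console.log(sameOrigin(
  "http://localhost:84/Attacker.htm",
  "http://localhost:85/StatusUpdateService.svc/UpdateStatus"
)); // false: the ports differ

console.log(sameOrigin(
  "http://localhost:85/Default.aspx",
  "http://localhost:85/StatusUpdateService.svc/UpdateStatus"
)); // true
```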

There’s a great example of how this works in the saltybeagle.com CORS demonstration which shows a successful CORS request where you can easily see what’s going on under the covers. This demo makes an HTTP request via JavaScript to a different server passing a piece of form data with it (in this case, a “Name” field). Here’s how the request looks in Fiddler:

Host: ucommbieber.unl.edu

Connection: keep-alive

Referer: http://saltybeagle.com/cors/

Access-Control-Request-Method: POST

Origin: http://saltybeagle.com

Access-Control-Request-Headers: X-Requested-With, Content-Type, Accept

Accept: */*

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.7 (KHTML, like Gecko) Chrome/7.0.517.41 Safari/534.7

Accept-Encoding: gzip,deflate,sdch

Accept-Language: en-US,en;q=0.8

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.3

Note how similar the structure is to the example of the vulnerable app earlier on. It’s an HTTP OPTIONS request with a couple of new access control request headers. Only this time, the response is very different:

Date: Sat, 30 Oct 2010 23:57:57 GMT

Server: Apache/2.2.14 (Unix) DAV/2 PHP/5.3.2

X-Powered-By: PHP/5.3.2

Access-Control-Allow-Origin: *

Access-Control-Allow-Methods: GET, POST, OPTIONS

Access-Control-Allow-Headers: X-Requested-With

Access-Control-Max-Age: 86400

Content-Length: 0

Keep-Alive: timeout=5, max=100

Connection: Keep-Alive

Content-Type: text/html; charset=utf-8

This is what would normally be expected, namely the Access-Control-Allow-Origin and Access-Control-Allow-Methods headers, which tell the browser it’s now free to go and make a POST request to the secondary server. So it does:

Host: ucommbieber.unl.edu

Connection: keep-alive

Referer: http://saltybeagle.com/cors/

Content-Length: 9

Origin: http://saltybeagle.com

X-Requested-With: XMLHttpRequest

Content-Type: application/x-www-form-urlencoded

Accept: */*

User-Agent: Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.7 (KHTML, like Gecko) Chrome/7.0.517.41 Safari/534.7

Accept-Encoding: gzip,deflate,sdch

Accept-Language: en-US,en;q=0.8

Accept-Charset: ISO-8859-1,utf-8;q=0.7,*;q=0.3

name=Troy

And it receives a nicely formed response:

Date: Sat, 30 Oct 2010 23:57:57 GMT

Server: Apache/2.2.14 (Unix) DAV/2 PHP/5.3.2

X-Powered-By: PHP/5.3.2

Access-Control-Allow-Origin: *

Access-Control-Allow-Methods: GET, POST, OPTIONS

Access-Control-Allow-Headers: X-Requested-With

Access-Control-Max-Age: 86400

Content-Length: 82

Keep-Alive: timeout=5, max=99

Connection: Keep-Alive

Content-Type: text/html; charset=utf-8

Hello CORS, this is ucommbieber.unl.edu

You sent a POST request.

Your name is Troy

Now test that back to back with Internet Explorer 8 and there’s only one request with an HTTP POST and of course one response with the expected result. The browser never checks if it’s allowed to request this resource from a location other than the site which served the original page.
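The decision a CORS-aware browser makes after the preflight can be approximated as below. This is a deliberately simplified sketch, not the full algorithm browsers implement: it only looks at the allowed origin and method, omits the Access-Control-Allow-Headers and credentials checks, and the `preflightAllows` and `parseList` helpers are hypothetical. It also assumes the vulnerable app’s 302 response carried no Access-Control-* headers.

```javascript
// Parses a comma-separated header value into a trimmed, upper-cased list.
function parseList(value) {
  return (value || "").split(",").map(s => s.trim().toUpperCase()).filter(Boolean);
}

// Simplified check: is this origin allowed, and is the intended method allowed?
function preflightAllows(responseHeaders, origin, method) {
  const allowedOrigin = responseHeaders["Access-Control-Allow-Origin"];
  const originOk = allowedOrigin === "*" || allowedOrigin === origin;
  const methodOk = parseList(responseHeaders["Access-Control-Allow-Methods"])
    .includes(method.toUpperCase());
  return originOk && methodOk;
}

// Headers taken from the saltybeagle.com demo response shown above.
const demoResponse = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
};
console.log(preflightAllows(demoResponse, "http://saltybeagle.com", "POST")); // true

// Assumed: the vulnerable app's redirect response contains no CORS headers.
console.log(preflightAllows({}, "http://localhost:84", "POST")); // false
```

A browser that skips the preflight entirely, as IE8 did here, never runs a check like this and simply sends the POST.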

Of course none of the current crop of browsers will protect against a GET request structured something like this: http://localhost:85/StatusUpdateService.svc/UpdateStatus?statusUpdate=Hey, I'm eating my breakfast now! It’s viewed as a simple hyperlink and the CORS concept of posting and sharing data across sites won’t apply.

This section has started to digress a little but the point is that there is a degree of security built into the browser in much the same way as browsers are beginning to bake in protection from other exploits such as XSS, just like IE8 does. But of course vulnerabilities and workarounds persist and just like when considering XSS vulnerabilities in an application, developers need to be entirely proactive in protecting against CSRF. Any additional protection offered by the browser is simply a bonus.

Other CSRF defences

The synchroniser token pattern is great, but it doesn’t have a monopoly on the anti-CSRF patterns. Another alternative is to force re-authentication before processing the request. An activity such as demonstrated above would challenge the user to provide their credentials rather than just blindly carrying out the request.

Yet another approach is good old Captcha. Want to let everyone know what you had for breakfast? Just successfully prove you’re a human by correctly identifying the string of distorted characters in the image and you’re good to go.

Of course the problem with both these approaches is usability. I’m simply not going to log on or translate a Captcha every time I Tweet or update my Facebook status. On the other hand, I’d personally find this an acceptable approach if it was used in relation to me transferring large sums of money around. Re-authentication in particular is a perfectly viable CSRF defence for financial transactions which occur infrequently and have a potentially major impact should they be accessed illegally. It all boils down to finding a harmonious usability versus security balance.

What won’t prevent CSRF

Disabling HTTP GET on vulnerable pages. If you look no further than CSRF being executed purely by a victim following a link directly to the vulnerable site, sure, disallowing GET requests is fine. But of course CSRF is equally exploitable using POST and that’s exactly what the example above demonstrated.

Only allowing requests with a referrer header from the same site. The problem with this approach is that it’s very dependent on an arbitrary piece of information which can be legitimately manipulated at multiple stages in the request process (browser, proxy, firewall, etc.). The referrer may also not be available if the request originates from an HTTPS address.

Storing tokens in cookies. The problem with this approach is that the cookie is persisted across requests. Indeed this was what allowed the exploit above to successfully execute – the authentication cookie was handed over along with the request. Because of this, tokenising a cookie value offers no additional defence to CSRF.

Ensuring requests originate from the same source IP address. This is totally pointless, not only because the entire exploit depends on the request appearing perfectly legitimate and originating from the same browser, but because dynamically assigned IP addresses can legitimately change, even within a single securely authenticated session. Then of course you also have multiple machines exposing the same externally facing IP address by virtue of shared gateways such as you’d find in a corporate scenario. It’s a fatally flawed defence.

Summary

The thing that’s a little scary about CSRF from the user’s perspective is that even though they’re “securely” authenticated, an oversight in the app design can lead to them – not even an attacker – making requests they never intended. Add to that the totally indiscriminate nature of who the attack can compromise on any given site and combine that with the ubiquity of exposed HTTP endpoints in the “Web 2.0” world (a term I vehemently dislike, but you get the idea), and there really is cause for extra caution to be taken.

The synchroniser token pattern really is a cinch to implement and the degree of randomness it introduces significantly erodes the predictability required to make a CSRF exploit work properly. For the most part, this would be sufficient but of course there’s always re-authentication if that added degree of request authenticity is desired.

Finally, this vulnerability serves as a reminder of the interrelated, cascading nature of application exploits. CSRF is one of those which depends on some other exploitable hole to begin with, whether that be SQL injection, XSS or plain old social engineering. So once again we come back to the layered defence approach where security mitigation is rarely any one single defence but rather a series of fortresses fending off attacks at various different points of the application.

Resources

- Cross-Site Request Forgery (CSRF) Prevention Cheat Sheet

- The Cross-Site Request Forgery (CSRF/XSRF) FAQ

- HttpHandler with cross-origin resource sharing support