It’s a hot summer day in Perth over on the western seaboard of Australia and the local pub is packed with patrons downing cold beers. You’re in your shiny new Ferrari – red, of course – and come cruising past the pub in full view of the enthralled audience. As any red-blooded, testosterone-fuelled Aussie bloke would do, you give the Italian thoroughbred a full redline launch to the delight of the crowd. Right up until you run into the street sign:

Why did this happen? Well, there’s the fact that the guy was allegedly drunk, which is never a good idea in any car, let alone a supercar with a few hundred kilowatts. But there’s also an interesting element of the story captured in the news article:

Witnesses said the driver revved the Italian stallion at the lights next to the Windsor Hotel, at the intersection of Mends Street and Mill Point Rd, dropped the clutch and burned off around the corner

Ah, “dropped the clutch” and “burned off” suggest our inebriated show pony had hit the button with the car and the squiggly lines✝:

As many car manufacturers now do, Ferrari fitted the 360 with traction control. Left on, which is the default, it responds to a loss of traction by cutting power to the wheels, allowing the vehicle (and hopefully the driver) to regain control and continue on without causing a scene. However, turn it off and suddenly the driver has to take a whole lot more responsibility for their actions because the safety net is gone. A default-on safety net of this kind is precisely what Microsoft provided when they introduced Request Validation to .NET.

✝ Ok, I’m wildly speculating for the sake of edutainment. And yes trainspotters, I referenced “dropping the clutch” then used an image of the F1 gearbox which obviously doesn’t have a manually actuated clutch. Edutainment!

About request validation

Back in the days of classic ASP, pretty much any data could readily be sent to the server through input mechanisms such as form fields and query strings without any parsing beyond what the competent developer would apply. And here’s your first problem: the term “competent developer” is a little like “common sense” in that neither is that common. Many devs simply did not (and still do not) understand what needs to be parsed and what the potential ramifications are when it doesn’t happen.

Why is request validation even important? Put simply, because you want to control the information which gets sent to your application and potentially stored or redisplayed. Failure to do this opens up a whole range of potential exploits to those with malicious intent.

XSS

Before I delve into specific vulnerabilities, I want to quickly draw attention to the Open Web Application Security Project or OWASP for short. OWASP defines a “Top 10” of the common web application security risks and has become the de facto guideline for all web developers conscious of security implications in their apps.

Onto the vulnerabilities; one of the most common exploits that take advantage of missing input validation is Cross Site Scripting or XSS. Here’s what OWASP has to say about XSS:

XSS flaws occur whenever an application takes untrusted data and sends it to a web browser without proper validation and escaping. XSS allows attackers to execute script in the victim’s browser which can hijack user sessions, deface web sites, or redirect the user to malicious sites.

Let’s break this down a little. “Untrusted data” refers to data sent to the application from third parties outside those explicitly trusted by the application, for example form and querystring inputs. “Proper validation and escaping” involves ensuring the data, although untrusted, is consistent with what is expected from an external party.

Worked examples

XSS exposes itself in a number of different forms but the most commonly seen and easily identifiable exploit involves rewriting querystring parameters directly to the page without validating or escaping characters. Here’s a classic example of what immediately appears to be a potentially vulnerable URL:

http://troyhunt.com/Default.aspx?Name=Troy

In this use case, my name would appear somewhere within the page. Changing the querystring value to “John” would change the name displayed on the page accordingly. This in itself may pose a vulnerability (direct rewriting of page content) but there are cases where this is valid. It’s the next step in the discovery phase which unearths the vulnerability. What if we changed the URL as follows:

http://troyhunt.com/Default.aspx?Name=Tr<script>alert(‘Hello’);</script>oy

There are three likely scenarios:

- The page will identify that a script tag is not valid input for the querystring parameter and the application will reject the request.

- The page will escape the non-alphanumeric characters and render them in HTML as Tr%3Cscript%3Ealert(%91Hello%92);%3C/script%3Eoy, which will then display on the page precisely as entered in the querystring.

- The page will display the name “Troy” and you will get a JavaScript alert saying “Hello”.
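The three scenarios can be sketched in a few lines. This is a rough, language-neutral illustration in Python rather than anything ASP.NET-specific: the function names and the name pattern are my own assumptions, and the second function uses HTML entity escaping (the usual way to neutralise markup in a page) rather than the percent-encoding shown in the list above.

```python
import html
import re

# Hypothetical allow-list for a display name: letters, spaces, hyphens, apostrophes.
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z '\-]{0,49}")


def reject_if_invalid(name: str) -> str:
    """Scenario 1: refuse anything that does not look like a name."""
    if not NAME_PATTERN.fullmatch(name):
        raise ValueError("invalid name")
    return f"<p>Hello {name}</p>"


def render_escaped(name: str) -> str:
    """Scenario 2: escape the value so any markup is displayed as plain text."""
    return f"<p>Hello {html.escape(name)}</p>"


def render_raw(name: str) -> str:
    """Scenario 3: write the value straight into the page (vulnerable)."""
    return f"<p>Hello {name}</p>"


payload = "Tr<script>alert('Hello');</script>oy"
print(render_escaped(payload))  # the script tag arrives as inert text
print(render_raw(payload))      # the script tag arrives as live markup
```

Only the third function hands the browser a real script tag; the first never renders the payload at all and the second renders it as harmless text.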

This example is seemingly harmless but stop and think for a moment what is going on in the third scenario. You are controlling not only the HTML content which gets rendered to the browser but the script which executes within it. Let’s run through the possibilities for a moment:

- You can rewrite the page contents by closing the tag where the text is displaying and entering your own.

- You can insert CSS which changes the layout of the page and substitutes it with your own.

- You can write tags which look like username and password fields with a submit button which sends the credentials to your server.

- You can insert an IFrame which loads malware from your server and prompts the user to install it.

- You can access the user’s cookies for the site (which may include sensitive data if poorly designed) and submit them to your own server.

- You can do all of the above in external .js or .css files by including external references.

There are some great examples in the Cross Site Scripting FAQ over on the cgisecurity.com site which talks about XSS in more detail and provides some excellent references if you want to see how much further the exploit can be pushed.

What makes these exploits particularly cunning is that as far as the user is concerned, they’re visiting a legitimate site. The domain looks valid and they’re actually seeing what they probably expected to, there’s just other stuff going on in the background.

Social engineering

In the example above, there is a dependency on someone loading a maliciously formed URL. There are other XSS exploits which are more subversive, such as successfully injecting the examples into a database which then renders them to unsuspecting users, but let’s focus on this one for the purposes of this post.

Tricking someone into following an exploited URL is simply a matter of social engineering. This term refers to manipulating the behaviour of an individual so that they perform an action which may not appear overtly dangerous. It’s simply trickery on the part of the party with malicious intent.

Encouraging the loading of a malicious URL could be done by distributing it through popular channels, such as social networking, with the promise of an alluring website. If the unsuspecting party can be convinced the website is trustworthy, often because the domain and website content both appear legitimate, then the battle is halfway over. It’s then a matter of exercising any number of the potential XSS exploits.

.NET request validation

The entire preamble so far was there so that the XSS technique and potential exploits were clear. As I said earlier on, in the old days of classic ASP the exploits above could easily be exercised if the developer hadn’t explicitly parsed input. Then along came .NET and with it the concept of Request Validation.

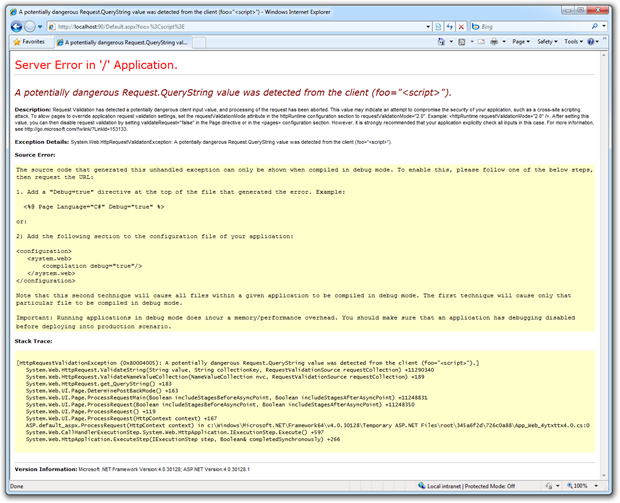

The beauty of Request Validation is that like the Ferrari’s traction control, it’s just there. You don’t have to turn it on, you don’t have to write code, and in fact you don’t even need to know it exists. It just works. To demonstrate this, here’s an entirely random querystring on a legitimate .NET page with custom errors turned off:

In this case there was no vulnerability to potentially exploit but Request Validation rolled out the safety net and identified a potentially malicious request anyway. This is a very good thing because no matter how (in)competent the developer is, this particular XSS mechanism cannot be exploited by many common string manipulation techniques.
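To give a feel for what a blanket check like this involves, here’s a minimal sketch in Python. It is not Microsoft’s implementation: the pattern (an opening angle bracket followed by a letter, “!”, “/” or “?”, or the sequence “&#”) is my assumption about the kind of markup-looking input such a filter would reject, and the exception and function names are hypothetical.

```python
import re
from typing import Mapping

# Assumed pattern: "<" followed by a letter, "!", "/" or "?", or the sequence "&#".
DANGEROUS = re.compile(r"<[A-Za-z!/?]|&#")


class PotentiallyDangerousRequest(Exception):
    """Hypothetical error raised when a submitted value looks like markup."""


def validate_request(params: Mapping[str, str]) -> None:
    """Check every submitted value before any page code gets to see it."""
    for key, value in params.items():
        if DANGEROUS.search(value):
            raise PotentiallyDangerousRequest(
                f"potentially dangerous value in {key!r}"
            )


validate_request({"Name": "Troy"})   # an ordinary value passes silently
validate_request({"Expr": "1 < 2"})  # a lone "<" is not treated as markup
```

The point of the design is that the check runs for every request regardless of what the page does with the value, which is why it catches the request even when there is nothing to exploit.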

However, there is a downside to the catchall approach. There are legitimate purposes for posting HTML tags to the server. One very common example of this is rich text editors. Many of these allow for editing in WYSIWYG or directly in HTML and as such this becomes a use case for legitimately posting HTML formatted strings to the server. However, just like the traction control button, Request Validation can be simply turned off and it can be done at the page level:

<%@ Page Language="C#" ValidateRequest="false" %>

Whilst this leaves that particular page vulnerable if no manual input parsing is performed, the scope is limited and all other pages still benefit from the full gamut of Request Validation protection. The other alternative is to turn off Request Validation across the entire application which leaves the web.config looking like this:

<configuration>
  <system.web>
    <pages validateRequest="false" />
  </system.web>
</configuration>

To get a sense of how easy it is to slip up when no global input validation exists, take a look at A XSS Vulnerability in Almost Every PHP Form I’ve Ever Written. Or XSS Woes for a similar experience. Very innocuous development practices, very big XSS holes.
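Once Request Validation is off for something like a rich text editor, the parsing has to be done by hand. One simple approach, sketched here in Python under my own assumptions (the tag list and function name are hypothetical, and a real editor would need a far richer allow-list), is to escape everything and then restore only a handful of attribute-free formatting tags:

```python
import html
import re

# Hypothetical allow-list of formatting tags, permitted only without attributes.
ALLOWED_TAGS = ("b", "i", "em", "strong", "p")
_ESCAPED_TAG = re.compile(r"&lt;(/?)(%s)&gt;" % "|".join(ALLOWED_TAGS))


def sanitise_rich_text(submitted: str) -> str:
    """Escape all markup, then re-enable only the bare allow-listed tags."""
    escaped = html.escape(submitted)
    return _ESCAPED_TAG.sub(r"<\1\2>", escaped)


print(sanitise_rich_text("<b>Hi</b><script>alert(1)</script>"))
```

Anything not explicitly on the list, including an allowed tag carrying attributes such as an event handler, stays escaped and is displayed as text.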

The DotNetNuke story

For those not already familiar with this product, DotNetNuke is an open source, .NET based content management system that has now been around in different forms for about 8 years. It’s achieved a strong following by those wanting to quickly and easily deploy CMS and portal style functionality in the .NET realm without the financial and administrative burden of SharePoint.

Being a content management system, there are a lot of places where text, rich text at that, needs to be submitted to the server. A decision appears to have been made to completely disable Request Validation at the DotNetNuke application level as demonstrated in the web.config file above. What this means is that the native protection described above is gone – the traction control is permanently off – and if the developer adds new features and hasn’t explicitly coded against the potential for an exploit, they could be vulnerable.

Unfortunately for DotNetNuke, in November 2009 they identified a vulnerability in their own codebase which spans versions 4.8 through 5.1.4 or to put it another way, nearly two years’ worth of revisions. This is a significant period of time and as such, a significant number of web applications exist out there with this vulnerability. In fact I found many vulnerable sites after just a few minutes of running carefully crafted Google searches.

The bottom line is that many DotNetNuke sites now sit out in the publicly facing domain with a very easily exploited vulnerability. This happens in part because the search page was not correctly parsing input but this alone would not have been a problem if Request Validation hadn’t been turned off.

Design utopia

When I came across a site with the vulnerability described above I fired out a quick tweet and got an interesting response. The feedback from DotNetNuke Corp was that .NET Request Validation was a “crude filter” and that it didn’t make sense in a product allowing online editing of HTML.

What Joe was trying to say (and elaborated on in subsequent tweets), was that Request Validation doesn’t provide the same fidelity as custom parsing of request content. And he’s absolutely right. I got similar feedback from a query on Stack Overflow with the response explaining how global input validation “makes programmers lazy” and that they “must be responsible for the vulnerabilities they create”. And I couldn’t agree more.

The problem with the feedback above is that it’s like saying we should just teach people to drive properly in the first place and forget about the traction control. Yes, we should and it’s an admirable pursuit but it’s simply not going to happen and we’ll need to continue catering for those with ambition that exceeds ability. In a utopian world, all developers would understand the OWASP Top 10 and wouldn’t introduce vulnerabilities into their code. But this just doesn’t happen which is why we have Request Validation along with web server level defences such as IIS UrlScan and browser level defences like the IE8 XSS Filter.

Unfortunately the reality is that people will continue to crash cars they are unequipped to handle and developers will keep writing vulnerable code because they don’t understand fundamental security concepts. Just as our friend in the Ferrari had the traction control button, we have a safety net for developers building on ASP.NET. If you turn either of these off, you’d better really know what you’re doing because you’re now on your own without a safety net.