The last week hasn’t been particularly kind to ASP.NET, and that’s probably a more than generous way of putting it. Only a week ago now, Scott Guthrie wrote about an Important ASP.NET Security Vulnerability: the padding oracle exploit. I watched with interest as he was flooded with a barrage of questions (316 as of now) and realised that whilst he’d done his best to explain the mitigation, he obviously had constraints around explaining why it was necessary.

I wrote about Fear, uncertainty and the padding oracle exploit in ASP.NET the day after in an attempt to shed some light on why Scott’s recommendations made good sense, even if they seemed a bit odd on the surface of it. I talked about how the custom error process obfuscated the underlying error message and how this offered a heightened level of security and I touched very briefly on his guidance about response rewriting and random sleep periods.

Now I want to demonstrate why random sleep periods are important, why response rewriting is essential and finally, why older versions of the .NET framework leave you more at risk than the newer releases.

Sep 29th update: The patch, and how to test for it, are covered in Do you trust your hosting provider and have they really installed the padding oracle patch?

Objective (and anti-objective)

No doubt there has been a rush to mitigate the vulnerability through the custom error configuration, but there seems to be less enthusiasm to implement the random sleep duration. This may be partly because there is a framework version dependency, but I also suspect it’s partly because the value proposition isn’t clear.

What I want to do in this post is demonstrate that different internal error types may exhibit different observable timing patterns. In short, some error types take longer to return a response than others. By doing this I hope to encourage people to implement Scott’s random timing recommendation on the basis that the timing attack concern has merit.

What I’m not going to do in this post (and let me be clear right now so I don’t set a false expectation) is demonstrate a timing attack in action against the padding oracle exploit. That would involve not only demonstrating the padding exploit when it has the server error codes to play with, but then layering the timing vector on top. We know the padding exploit exists; all we need to do now is demonstrate that a timing vector could indeed be involved, and that alone should be sufficient to encourage pro-activeness.

Attacking from the side

We can’t talk about sleep patterns until we understand side channel attacks. A side channel attack is a more subtle way of breaching a system than, say, a brute force attack which tends to be a fairly aggressive process. In a side channel attack, the focus is more on observing the behaviour of a system under different scenarios and listening passively than trying to break through with a sledgehammer:

A side channel attack is any attack based on information gained from the physical implementation of a cryptosystem, rather than brute force or theoretical weaknesses in the algorithms

Side channel attacks can come at a system from a number of different angles. For example, analysing the power consumption of a cryptographic device can disclose its internal operation: under different states in the decryption process, a device may draw a different current, which discloses information about the implementation and provides leverage to begin an attack.

In a timing attack, it’s the duration of different states in the decryption process that is monitored. In relation to the padding oracle vulnerability, if an observable pattern can be established to distinguish between different types of internal error – regardless of the information presented to the end user – a timing attack may be possible.
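To make the idea concrete, here is a minimal C# sketch. It is a generic illustration, not the ASP.NET code path. An early-exit byte comparison does more work the longer the matching prefix is, so its duration leaks how close a guess is. The constant-time version always examines every byte. The class and method names are hypothetical. `bytesExamined` stands in for elapsed time so the leak is visible without a stopwatch.

```csharp
using System;

// Hypothetical illustration of a timing leak versus a fixed-work comparison.
public static class TimingLeakDemo
{
    // Early-exit comparison: stops at the first mismatching byte, so the work done
    // (and therefore the time taken) grows with the length of the matching prefix.
    // bytesExamined records that work so it can be inspected directly.
    public static bool EarlyExitEquals(byte[] a, byte[] b, out int bytesExamined)
    {
        bytesExamined = 0;
        if (a.Length != b.Length) return false;
        for (int i = 0; i < a.Length; i++)
        {
            bytesExamined++;
            if (a[i] != b[i]) return false;
        }
        return true;
    }

    // Fixed-work comparison: always visits every byte and accumulates differences,
    // so the amount of work doesn't depend on where the first mismatch occurs.
    public static bool ConstantTimeEquals(byte[] a, byte[] b)
    {
        if (a.Length != b.Length) return false;
        int diff = 0;
        for (int i = 0; i < a.Length; i++)
        {
            diff |= a[i] ^ b[i];
        }
        return diff == 0;
    }
}
```

On .NET Core 2.1 and later, `CryptographicOperations.FixedTimeEquals` provides a built-in fixed-time comparison. The hand-rolled version above is only there to show the principle.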


There’s a school of thought that says timing attacks from remote locations (i.e. across the web rather than sitting next to the device) are impractical, because the variability of network latency undermines the precision with which time needs to be measured. In fact, only a couple of months back OAuth and OpenID came under scrutiny and the defence claimed “a timing attack would not succeed because traffic response times would always fluctuate”. The same article then goes on to explain that the attack was indeed performed successfully from a remote location, so there is a real possibility this technique is practical across the web.

The gory details

As I said earlier, I’m not going to demonstrate a successful timing attack against the padding oracle vulnerability. What I am going to do is show how one may be feasible. Timing attacks can become rather complex affairs, as Timing Attacks on Implementations of Diffie-Hellman, RSA, DSS, and Other Systems shows. Let me put it this way: take a look at the formula in that paper under “Simplifying the attack”.

I always did pretty well in advanced mathematics at school and university but this goes way beyond my comprehension – and it’s the simple version! Let’s keep it basic and just focus on observable time-based error behaviour.

The test parameters

If we can demonstrate that different ASP.NET errors follow different timing patterns, we can show that obfuscating the underlying error message alone may not be sufficient to protect against the padding oracle exploit. More specifically, it would give credence to Microsoft’s advice to introduce randomness to the error’s response time.

The simplest way to demonstrate this is to stand up a local ASP.NET app, make n requests to pages returning different error types and observe the duration patterns. In the following test, the app is a straight-out-of-the-box Visual Studio 2010 ASP.NET Empty Web Application template running against .NET 4. It is compiled for release with debugging set to “false” and runs in a local IIS instance on my x64 Windows 7 machine. The custom errors are configured just the way Microsoft would like it in response to the padding oracle issue:

```xml
<customErrors mode="On" redirectMode="ResponseRewrite"
              defaultRedirect="~/Error.aspx"/>
```

I’m going to test several different URIs in the website by building a dedicated console app which uses the HttpWebRequest class to make consecutive requests. Before calling GetResponse on the request I’m going to start a stopwatch instance then make the request, stop the stopwatch and capture the duration in ticks. Here’s how it looks:

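```csharp
using System.Diagnostics;
using System.Net;

// url is the URI under test
var req = (HttpWebRequest)WebRequest.Create(url);
var sw = new Stopwatch();
sw.Start();
var resp = (HttpWebResponse)req.GetResponse();
resp.Close();
sw.Stop();
// ElapsedTicks counts at Stopwatch.Frequency ticks per second; sw.Elapsed.Ticks gives 100 ns ticks
var duration = sw.ElapsedTicks;
```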

I’ll run this 1,500 times for each test, discard the results outside one standard deviation of the mean then take the first 1,000 remaining durations. This gives a reasonably consistent sample set that should illustrate an observable pattern.
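A sketch of that sampling and filtering procedure follows. It assumes population standard deviation, because the post doesn't say which variant was used. It also assumes a `measureOnce` delegate that wraps the HttpWebRequest/Stopwatch snippet above. `TimingSamples`, `Collect` and `FilterWithinOneStdDev` are hypothetical names for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helpers mirroring the procedure: collect N durations, drop outliers, keep the first M.
public static class TimingSamples
{
    // Calls measureOnce 'runs' times and returns every duration in the order measured.
    public static List<long> Collect(int runs, Func<long> measureOnce)
    {
        var durations = new List<long>(runs);
        for (int i = 0; i < runs; i++)
        {
            durations.Add(measureOnce());
        }
        return durations;
    }

    // Discards samples more than one (population) standard deviation from the mean,
    // then keeps the first 'take' survivors in their original order.
    public static List<long> FilterWithinOneStdDev(IReadOnlyList<long> samples, int take)
    {
        double mean = samples.Average();
        double variance = samples.Sum(s => (s - mean) * (s - mean)) / samples.Count;
        double stdDev = Math.Sqrt(variance);
        return samples
            .Where(s => Math.Abs(s - mean) <= stdDev)
            .Take(take)
            .ToList();
    }
}
```

A usage sketch would be `TimingSamples.FilterWithinOneStdDev(TimingSamples.Collect(1500, measureOnce), 1000)`. Here `measureOnce` is whatever wraps a single timed request.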

One last thing: this test deals with very, very small units of time. So any process using system resources gets killed, all apps other than the test exe are closed, the Wi-Fi is off and no devices are connected.

The test paths

Going back to the objective, what I want to do is identify a pattern in the timing of different error types that might suggest what the underlying error was, over and beyond what the response discloses. The ASP.NET padding oracle targets two scenarios as possible outcomes when the ciphertext passed to WebResource.axd is manipulated:

- A System.Security.Cryptography.CryptographicException when the padding is invalid. I’m going to make a request to WebResource.axd with a complete “d” value in the query string taken from another app. This renders the ciphertext invalid in the context of the test app, because it was encrypted with a different key.

- A 404 when the padding is valid but the decrypted string points to a resource which doesn’t exist. In this instance I’m just going to request WebResource.axd directly without any query string parameters which will cause it to return 404. Ideally I’d have a (non-existent) resource ID encoded into the parameter but this test would only work if I’d successfully exploited at least one byte of the initialisation vector which is a step too far for this post.
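Both outcomes hinge on whether the decrypted data has valid padding. The sketch below is a hypothetical helper, not ASP.NET's actual code. It shows the PKCS#7 rule, PKCS#7 being the default `PaddingMode` for .NET symmetric algorithms. The last byte N must lie between 1 and the block size, and the final N bytes must all equal N. When .NET's own decryption finds padding that breaks this rule, it throws a CryptographicException. When the padding passes, processing continues and, in the second scenario, ends in a 404. The 16-byte default block size is an assumption (AES); 3DES would use 8.

```csharp
using System;

// Hypothetical helper showing what "valid padding" means under PKCS#7.
public static class Pkcs7
{
    // PKCS#7 appends N bytes, each with value N (1..blockSize). A decrypted buffer
    // is valid only if its last byte N is in range and the final N bytes all equal N.
    public static bool HasValidPadding(byte[] decrypted, int blockSize = 16)
    {
        if (decrypted.Length == 0 || decrypted.Length % blockSize != 0) return false;
        int n = decrypted[decrypted.Length - 1];
        if (n < 1 || n > blockSize) return false;
        for (int i = decrypted.Length - n; i < decrypted.Length; i++)
        {
            if (decrypted[i] != n) return false;
        }
        return true;
    }
}
```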

This isn’t perfect, but it’s enough to demonstrate the potential for a side channel attack based on duration. Let’s run it!

The results

As I mentioned earlier, I killed every possible process on the PC before running this. The results were captured into an in-memory collection. After the whole run finished, the result set was written to a text file and sucked into Excel. I then shut everything down, ran the test against the second URL and repeated the process. I did the same test half a dozen times over and got results that were consistent enough to be comfortable that there was a pattern.

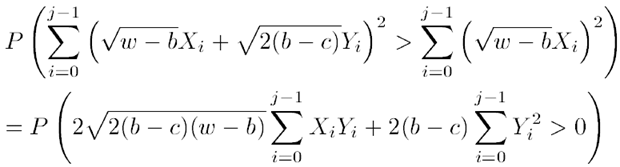

Below is the result. The red series is the cryptographic exception and the blue one the 404. The scale on the left is measured in ticks (one ten-thousandth of a millisecond) and the numbers you’re seeing (click to enlarge), range from 2,500 ticks to 3,500 ticks:

Obviously there’s a pattern here. Over the 1,000 samples in the graph, the cryptographic error averaged 3,090 ticks whilst the 404 came in at 2,802 ticks. In other words, this test has observed a 10% increase in duration when the padding is invalid.
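The arithmetic behind those figures is below. It takes the post's definition of a tick as one ten-thousandth of a millisecond (100 ns):

$$
\begin{aligned}
\Delta &= \bar{t}_{\text{crypto}} - \bar{t}_{404} = 3090 - 2802 = 288\ \text{ticks} \\
\frac{\Delta}{\bar{t}_{404}} &= \frac{288}{2802} \approx 0.103 \approx 10\% \\
288\ \text{ticks} \times 100\ \text{ns} &= 28.8\ \mu\text{s}, \qquad \frac{1\ \text{ms}}{28.8\ \mu\text{s}} \approx 34.7
\end{aligned}
$$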

I’m the first to admit that 288 ticks seems almost infinitesimal; to put that in perspective, there are about 35 of these in a millisecond. Still, there’s no denying there’s a pattern. Scott Guthrie clearly has a concern over timing attacks and, as we saw earlier in the OAuth and OpenID reference, remote timing attacks are a real possibility. I don’t know precisely how these are executed across the ravages of the internet whilst still maintaining enough fidelity to identify patterns, but I do know it’s theoretically possible and worth protecting against.

The fix

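Scott’s guidance is clear and simple: do this in the error page:

```aspx
<%@ Import Namespace="System.Security.Cryptography" %>
<%@ Import Namespace="System.Threading" %>
<script runat="server">
    void Page_Load()
    {
        // One random byte gives a 0-255 ms delay; never echo it in the response
        byte[] delay = new byte[1];
        RandomNumberGenerator prng = new RNGCryptoServiceProvider();
        prng.GetBytes(delay);
        Thread.Sleep((int)delay[0]);
        IDisposable disposable = prng as IDisposable;
        if (disposable != null) { disposable.Dispose(); }
    }
</script>
```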

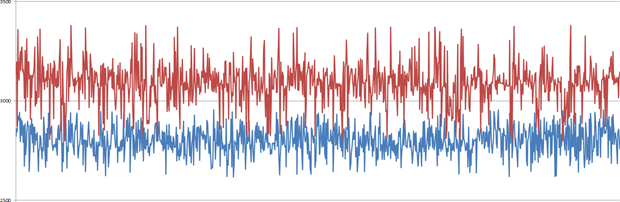

The bottom line is that the sleep period is entirely random and introduces anywhere from an additional 0 to 255 milliseconds to the duration of the error page load time. Considering the timing differences we identified earlier, even just a few milliseconds of randomness should do it. Here’s the results look afterwards:

I actually had to generate this graph from 850 samples rather than 1,000. With such a large degree of randomness, discarding everything outside one standard deviation of the mean gobbled up a lot of the 1,500 results. There’s obviously a clear advantage to be gained here: the identifiable pattern of the previous test is gone, and there’s absolutely no definable pattern left.
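Here is a quick sanity check on why so few samples survived. It assumes the random delay dominates the variance and treats it as uniform over 0 to 255 ms. Roughly $1/\sqrt{3}$ of a uniform distribution lies within one standard deviation of its mean, which is in the same ballpark as the 850 samples used here. The same figures show how much the delay's spread dwarfs the 28.8 µs gap measured earlier, again taking a tick as 100 ns:

$$
\begin{aligned}
\mu_d &= \tfrac{255}{2} = 127.5\ \text{ms} \\
\sigma_d &= \sqrt{\tfrac{256^2 - 1}{12}} = \sqrt{5461.25} \approx 73.9\ \text{ms} \\
\tfrac{2\sigma_d}{256} &\approx 0.577 \quad\Rightarrow\quad 0.577 \times 1500 \approx 866\ \text{samples} \\
\tfrac{\sigma_d}{28.8\ \mu\text{s}} &\approx \tfrac{73.9}{0.0288} \approx 2{,}566
\end{aligned}
$$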

It’s free, it doesn’t require a recompile if you implement it within the ASPX page and the advantage is clear. There’s only one caveat…

Why earlier generation ASP.NET remains at risk

In Scott’s post, he talks about simply turning on custom errors and defining a default redirect (to an HTML page, at that) in pre-.NET 3.5 SP1 apps. For the newer versions, he talks about using response rewriting and introducing the random sleep. What he doesn’t explicitly say is that because the earlier versions can’t do response rewriting, they remain exposed to timing attacks.

The difference between these two redirect modes is this. With a response redirect, when a page throws an error the server immediately sends an HTTP 302 Found to the browser with a “Location” header. This causes the browser to make a separate request to that address, which in the context of custom errors is the error page itself. When response rewriting is used, the error page is returned directly from the request which caused the error, with an HTTP 200 OK status.

The significance with regard to random sleep periods is that, with response rewriting, the error page must be processed before any response is returned to the browser. A response redirect, by contrast, sends an immediate response, after which the browser makes a separate request to the error page. A random sleep at that stage would be pointless as the server has already responded, hence Scott’s reference to using just a plain old HTML page in this mode.
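Below is a simplified model of what an attacker timing the first response would see, ignoring network latency. Let $t_e$ be the time the server takes to process the failing request up to the point the error is handled. Let $t_p$ be the time to run the error page, and $d$ the random sleep. These symbols are introduced here for illustration only:

$$
\begin{aligned}
t_{\text{redirect}} &= t_e \quad \text{(302 returned; } t_p + d \text{ is spent on a separate request)} \\
t_{\text{rewrite}} &= t_e + t_p + d
\end{aligned}
$$

Only in the rewrite case does $d$ land in the measured duration, so only there can it mask differences in $t_e$ between error types.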

Of course, what all of this boils down to is that earlier versions of .NET are at greater risk of the padding oracle exploit through timing attacks. I tried to pin Scott down in a comment regarding the benefit of upgrading, but he was reluctant to commit to recommending this route. I understand his position: he’s between a rock and a hard place when it comes to making recommendations about major changes to applications. By the same token, though, he’s gone to the trouble of explicitly recommending additional mitigation for newer versions of the framework, and there is obviously a basis for his guidance.

Summary

Although it’s not entirely clear precisely how a timing attack would exploit the padding oracle vulnerability across all the vagaries of the internet, what is clear is that there is a basis of concern for this attack vector. Not just because it’s been proven in other contexts but because Microsoft has gone to the trouble of issuing specific guidance to avoid precisely this type of attack.

Frankly, I see this as a bit of a crisitunity; it’s a good time to finally bring those old apps into current-generation technology and take advantage of the response rewriting and random sleeps. Yes, I know it means a recompile, re-deployment and retesting. Then again, Scott’s latest advice today in his Update on ASP.NET Vulnerability is to install UrlScan and configure a custom scan rule, and most people won’t do that without a good deal of testing anyway. Server installs tend to be taken a bit more seriously than just making a small web.config change.

That’s a good place to wrap up; this vulnerability is still playing out and the goalposts are already moving after only one week. As always, application security needs to be applied in layers, as history shows there’s a tendency for them to get peeled back over time. The random sleep has merit, as do the custom error configuration and the URL scanning. For my money, I’d be applying all of these liberally and enthusiastically.

Resources

- Timing Attacks on Implementations of Diffie-Hellman, RSA, DSS, and Other Systems (PDF)

- Remote Timing Attacks are Practical (PDF)